I've been working on a little idea where I'd have an app (maybe a mobile app with Xamarin or maybe a SPA, I haven't decided yet) for the easily accessing and searching across the 500+ videos from http://friday.azure.com.

I've been working on a little idea where I'd have an app (maybe a mobile app with Xamarin or maybe a SPA, I haven't decided yet) for the easily accessing and searching across the 500+ videos from http://friday.azure.com.

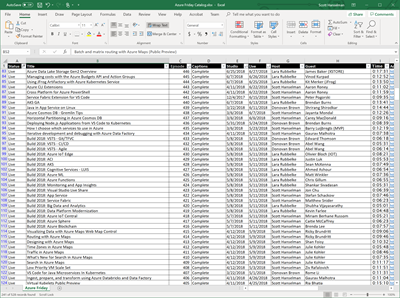

HOWEVER. I don't have access to the database that hosts the metadata and while I'm trying to get at least read-only access to it (long story) the best I can do is a giant Excel spreadsheet dump that I was given that has all the video details.

This, of course, is sub-optimal, but regardless of how you feel about it, it's a database. Or, a data source at the very least! Additionally, since it was always going to end up as JSON in a cached in-memory database regardless, it doesn't matter much to me.

In real-world business scenarios, sometimes the authoritative source is an Excel sheet, sometimes it's a SQL database, and sometimes it's a flat file. Who knows?

What's most important (after clean data) is that the process one builds around that authoritative source is reliable and repeatable. For example, if I want to build a little app or one page website, yes, ideally I'd have a direct connection to the SQL back end. Other alternative sources could be a JSON file sitting on a simple storage endpoint accessible with a single HTTP GET. If the Excel sheet is on OneDrive/SharePoint/DropBox/whatever, I could have a small serverless function run when the files changes (or on a daily schedule) that would convert the Excel sheet into a JSON file and drop that file onto storage. Hopefully you get the idea. The goal here is clean, reliable pragmatism. I'll deal with the larger business process issue and/or system architecture and/or permissions issue later. For now the "interface" for my app is JSON.

So I need some JSON and I have this Excel sheet.

Turns out there's a lovely open source project and NuGet package called ExcelDataReader. There's been ways to get data out of Excel for decades. Literally decades. One of my first jobs was automating Microsoft Excel with Visual Basic 3.0 with COM Automation. I even blogged about getting data out of Excel into ASP.NET 16 years ago!

Today I'll use ExcelDataReader. It's really nice and it took less than an hour to get exactly what I wanted. I haven't gone and made it super clean and generic, refactored out a bunch of helper functions, so I'm interested in your thoughts. After I get this tight and reliable I'll drop it into an Azure Function and then focus on getting the JSON directly from the source.

A few gotchas that surprised me. I got a "System.NotSupportedException: No data is available for encoding 1252." Windows-1252 or CP-1252 (code page) is an old school text encoding (it's effectively ISO 8859-1). Turns out newer .NETs like .NET Core need the System.Text.Encoding.CodePages package as well as a call to System.Text.Encoding.RegisterProvider(System.Text.CodePagesEncodingProvider.Instance); to set it up for success. Also, that extra call to reader.Read at the start to skip over the Title row had me pause a moment.

using System;

using System.IO;

using ExcelDataReader;

using System.Text;

using Newtonsoft.Json;

namespace AzureFridayToJson

{

class Program

{

static void Main(string[] args)

{

Encoding.RegisterProvider(CodePagesEncodingProvider.Instance);

var inFilePath = args[0];

var outFilePath = args[1];

using (var inFile = File.Open(inFilePath, FileMode.Open, FileAccess.Read))

using (var outFile = File.CreateText(outFilePath))

{

using (var reader = ExcelReaderFactory.CreateReader(inFile, new ExcelReaderConfiguration()

{ FallbackEncoding = Encoding.GetEncoding(1252) }))

using (var writer = new JsonTextWriter(outFile))

{

writer.Formatting = Formatting.Indented; //I likes it tidy

writer.WriteStartArray();

reader.Read(); //SKIP FIRST ROW, it's TITLES.

do

{

while (reader.Read())

{

//peek ahead? Bail before we start anything so we don't get an empty object

var status = reader.GetString(0);

if (string.IsNullOrEmpty(status)) break;

writer.WriteStartObject();

writer.WritePropertyName("Status");

writer.WriteValue(status);

writer.WritePropertyName("Title");

writer.WriteValue(reader.GetString(1));

writer.WritePropertyName("Host");

writer.WriteValue(reader.GetString(6));

writer.WritePropertyName("Guest");

writer.WriteValue(reader.GetString(7));

writer.WritePropertyName("Episode");

writer.WriteValue(Convert.ToInt32(reader.GetDouble(2)));

writer.WritePropertyName("Live");

writer.WriteValue(reader.GetDateTime(5));

writer.WritePropertyName("Url");

writer.WriteValue(reader.GetString(11));

writer.WritePropertyName("EmbedUrl");

writer.WriteValue($"{reader.GetString(11)}player");

/*

<iframe src="https://channel9.msdn.com/Shows/Azure-Friday/Erich-Gamma-introduces-us-to-Visual-Studio-Online-integrated-with-the-Windows-Azure-Portal-Part-1/player" width="960" height="540" allowFullScreen frameBorder="0"></iframe>

*/

writer.WriteEndObject();

}

} while (reader.NextResult());

writer.WriteEndArray();

}

}

}

}

}

The first pass is on GitHub at https://github.com/shanselman/AzureFridayToJson and the resulting JSON looks like this:

[

{

"Status": "Live",

"Title": "Introduction to Azure Integration Service Environment for Logic Apps",

"Host": "Scott Hanselman",

"Guest": "Kevin Lam",

"Episode": 528,

"Live": "2019-02-26T00:00:00",

"Url": "https://azure.microsoft.com/en-us/resources/videos/azure-friday-introduction-to-azure-integration-service-environment-for-logic-apps",

"embedUrl": "https://azure.microsoft.com/en-us/resources/videos/azure-friday-introduction-to-azure-integration-service-environment-for-logic-appsplayer"

},

{

"Status": "Live",

"Title": "An overview of Azure Integration Services",

"Host": "Lara Rubbelke",

"Guest": "Matthew Farmer",

"Episode": 527,

"Live": "2019-02-22T00:00:00",

"Url": "https://azure.microsoft.com/en-us/resources/videos/azure-friday-an-overview-of-azure-integration-services",

"embedUrl": "https://azure.microsoft.com/en-us/resources/videos/azure-friday-an-overview-of-azure-integration-servicesplayer"

},

...SNIP...

Thoughts? There's a dozen ways to have done this. How would you do this? Dump it into a DataSet and serialize objects to JSON, make an array and do the same, automate Excel itself (please don't do this), and on and on.

Certainly this would be easier if I could get a CSV file or something from the business person, but the issue is that I'm regularly getting new drops of this same sheet with new records added. Getting the suit to Save As | CSV reliably and regularly isn't sustainable.

Sponsor: Stop wasting time trying to track down the cause of bugs. Sentry.io provides full stack error tracking that lets you monitor and fix problems in real time. If you can program it, we can make it far easier to fix any errors you encounter with it.

© 2018 Scott Hanselman. All rights reserved.