This blog post was co-authored by Liz Yu (Marketing), Bryden Oliver (Architect), Iain Shepard (Senior Software Engineer) at Spotlight Cloud, and Deborah Chen (Program Manager), Sri Chintala (Program Manager) at Azure Cosmos DB.

Spotlight Cloud is the first database performance monitoring solution built on Azure that is focused on SQL Server customers. Leveraging the scalability, performance, global distribution, high availability, and built-in security of Microsoft Azure Cosmos DB, Spotlight Cloud combines the best of the cloud with Quest Software’s engineering insights from years of building database performance management tools.

Spotlight Cloud delivers database insights that lead customers to higher availability, better scalability, and faster problem resolution for their SQL solutions, so it needed a backend database service that offered those same qualities.

Using Azure Cosmos DB and Azure Functions, Quest was able to build a proof of concept within two months and deploy to production in less than eight months.

“Azure Cosmos DB will allow us to scale as our application scales. As we onboard more customers, we value the predictability in terms of performance, latency, and the availability we get from Azure Cosmos DB.”

- Patrick O’Keeffe, VP of Software Engineering, Quest Software

Spotlight Cloud requirements

The amount of data needed to support a business continually grows. As that data scales, Spotlight Cloud must scale with it, because it needs to analyze all of it. Quest’s developers knew they needed a highly available database service that met the following requirements at an affordable cost:

- Collect and store many different types of data and send it to an Azure-based storage service. The data comes from SQL Server DMVs, OS performance counter statistics, SQL plans, and other useful information. The data collected varies greatly in size (100 bytes to multiple megabytes) and shape.

- Accept 1,200 operations/second on the data with the ability to continue to scale as more customers use Spotlight Cloud.

- Query and return data to aid in the diagnosis and analysis of SQL Server performance problems quickly.

After a thorough evaluation of many products, Quest chose Azure Functions and Azure Cosmos DB as the backbone of their solution. Spotlight Cloud was able to leverage both Azure Function apps and Azure Cosmos DB to reduce cost, improve performance, and deliver a better service to their customers.

Solution

Part of the core data flow in Spotlight Cloud. Other technologies used, not shown, include Event Hub, Application Insights, Key Vault, Storage, DNS.

The core data processing flow within Spotlight Cloud is built on Azure Functions and Azure Cosmos DB. This technology stack provides Quest with the high scale and performance they need.

| | |
| --- | --- |
| Scale | Ingest apps handle more than 1,000 sets of customer monitoring data per second. To support this, the Azure Functions consumption plan automatically scales out to hundreds of VMs. Azure Cosmos DB provides guaranteed throughput for databases and containers, measured in Request Units per second (RU/s) and backed by SLAs. By estimating the required throughput of the workload and translating it to RU/s, Quest was able to achieve predictable throughput of reads and writes against Azure Cosmos DB at any scale. |
| Performance | Azure Cosmos DB handles the write and read operations for Spotlight’s data in under 60 milliseconds. This enables customers’ SQL Server data to be quickly ingested and available for analysis in near real time. |
| High availability | Azure Cosmos DB provides a 99.999% availability SLA for reads and writes when using two or more regions. Availability is crucial for Spotlight Cloud’s customers, as many are in the healthcare, retail, and financial services industries and cannot afford to experience any database downtime or performance degradation. In the event a failover is needed, Azure Cosmos DB fails over automatically with no manual intervention, enabling business continuity. With turnkey global distribution, Azure Cosmos DB handles automatic and asynchronous replication of data between regions. To take full advantage of their provisioned throughput, Quest designated one region to handle writes (data ingest) and another for reads. As a result, users’ read response times are never impacted by the write volume. |
| Flexible schema | Azure Cosmos DB accepts JSON data of varying size and schema. This enabled Quest to store a variety of data from diverse sources, such as SQL Server DMVs and OS performance counter statistics, and removed the need to worry about fixed schemas or schema management. |
| Developer productivity | Azure Functions tooling made the development and coding process very smooth, which enabled developers to be productive immediately. Developers also found Azure Cosmos DB’s SQL query language easy to use, reducing the ramp-up time. |
| Cost | The Azure Functions consumption pricing model charges only for the compute and memory each function invocation uses. Particularly for lower-volume microservices, this lets users operate at low cost. In addition, using Azure Functions on a consumption plan gives Quest the ability to have failover instances on standby at all times and only incur cost if failover instances are actually used. From a Total Cost of Ownership (TCO) perspective, Azure Cosmos DB and Azure Functions are both managed solutions, which reduced the amount of time spent on management and operations. This enabled the team to focus on building services that deliver direct value to their customers. |
| Support | Microsoft engineers are directly available to help with issues, provide guidance, and share best practices. |
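To make the "translate the workload to RU/s" step concrete, here is a minimal sketch of the arithmetic. The per-operation RU costs, the operation mix, and the 25% headroom are illustrative assumptions, not figures from Quest; in practice the cost of each operation would be measured against real documents.

```python
import math
from typing import Iterable, Tuple


def estimate_rus(workload: Iterable[Tuple[float, float]], headroom: float = 0.25) -> int:
    """Estimate the RU/s to provision for a workload.

    workload: (operations per second, RU cost per operation) pairs,
              one pair per kind of operation.
    headroom: extra fraction added on top to absorb bursts (assumed value).
    The result is rounded up to the next multiple of 100 RU/s.
    """
    raw = sum(rate * cost for rate, cost in workload)
    return math.ceil(raw * (1 + headroom) / 100) * 100


# Hypothetical mix: 1,200 writes/s at 10 RU each plus 200 reads/s at 3 RU each.
print(estimate_rus([(1200, 10), (200, 3)]))  # 15800
```

Keeping reads and writes as separate entries makes it easy to see which kind of operation dominates the bill before deciding what to tune.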

With Spotlight Cloud, Quest’s customers have the advantage of storing data in Azure instead of an on-premises SQL Server database. Customers also have access to all the analysis features that Quest provides in the cloud. For example, a customer can investigate the SQL workload and performance on their SQL Server in great detail to optimize the data and queries for their users - all powered by Spotlight Cloud running on top of Azure Cosmos DB.

"We were looking to upgrade our storage solution to better meet our business needs. Azure Cosmos DB gave us built-in high availability and low latency, which allowed us to improve our uptime and performance. I believe Azure Cosmos DB plays an important role in our Spotlight Cloud to enable customers to access real-time data fast."

- Efim Dimenstein, Chief Cloud Architect, Quest Software

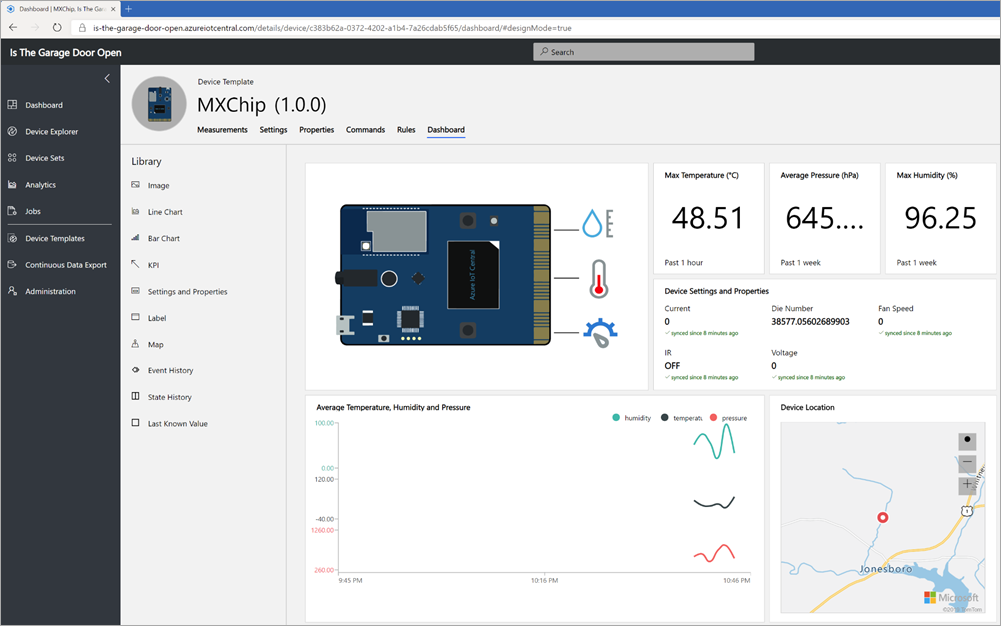

Deployment diagram of Spotlight Cloud’s ingest and egress apps

The diagram above shows how data flows through the system. Traffic Manager routes incoming data to an available ingest app, which writes it into the Azure Cosmos DB write region. Data consumers are routed via Traffic Manager to an egress app, which then reads data from the Azure Cosmos DB read region.
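The read/write split can be sketched as a small piece of per-app configuration. This is an illustration only: the region names and role labels are hypothetical, and it assumes each app hands an ordered region preference list to its Cosmos DB client (in the Python SDK, a list like this is typically supplied through the client's `preferred_locations` option).

```python
from typing import List

# Hypothetical region names; the post does not say which regions Quest uses.
WRITE_REGION = "West US 2"
READ_REGION = "East US 2"


def preferred_regions(app_role: str) -> List[str]:
    """Return the ordered region preference for an app role.

    Ingest apps prefer the write region; egress apps prefer the read
    region, so query traffic does not compete with write volume.
    The other region is kept as a fallback in both cases.
    """
    if app_role == "ingest":
        return [WRITE_REGION, READ_REGION]
    if app_role == "egress":
        return [READ_REGION, WRITE_REGION]
    raise ValueError(f"unknown app role: {app_role!r}")


print(preferred_regions("egress"))  # ['East US 2', 'West US 2']
```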

Learnings and best practices

In building Spotlight Cloud, Quest gained a deep understanding of how to use Azure Cosmos DB in the most effective way:

| | |
| --- | --- |
| Understand Azure Cosmos DB’s provisioned throughput model (RU/s) | Quest measured the cost of each operation and the number of operations per second, then provisioned the total amount of throughput required in Azure Cosmos DB. Since Azure Cosmos DB cost is based on storage and provisioned throughput, choosing the right amount of RUs was key to using Azure Cosmos DB in a cost-effective manner. |
| Choose a good partition strategy | Quest chose a partition key for their data that resulted in a balanced distribution of request volume and storage. This is critical because Azure Cosmos DB shards data horizontally and distributes total provisioned RUs evenly among the partitions of data. During the development stage, Quest experimented with several choices of partition key and measured the impact on performance. If a partition key strategy was unbalanced, a workload would require more RUs than with a balanced partition strategy. Quest chose a synthetic partition key that incorporated the Server Id and the type of data being stored. This gave a high number of distinct values (high cardinality), leading to an even distribution of data, which is crucial for a write-heavy workload. |
| Tune indexing policy | For Quest’s write-heavy workload, tuning the indexing policy and the RU cost of writes was key to achieving good performance. To do this, Quest modified the Azure Cosmos DB indexing policy to explicitly index commonly queried properties in a document and exclude the rest. In addition, Quest included only a few commonly used properties in the body of the document and encoded the rest of the data into a single property. |
| Scale up and down RUs based on data access pattern | In Spotlight Cloud, customers tend to access recent data more frequently than older data. At the same time, new data continues to be written in a steady stream, making it a write-heavy workload. To tune the overall provisioned RUs of the workload, Quest split the data into multiple containers. A new container is created regularly (e.g. every week to a few months) with high RUs, ready to receive writes. Once the next new container is ready, the previous container’s RUs are reduced to only what is required to serve the expected read operations. Writes are then directed to the new container with its high number of RUs. |
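The partitioning, indexing, and container-rotation practices above can be pictured together in one short sketch. Everything named here is an assumption made for illustration: the property names, the `:` separator in the key, the weekly rotation, and the container naming scheme are not taken from Quest's implementation. The indexing policy follows the documented Azure Cosmos DB JSON shape for opting in specific paths and excluding everything else.

```python
import json
from datetime import datetime, timezone

# Index only the commonly queried properties; exclude all other paths.
INDEXING_POLICY = {
    "indexingMode": "consistent",
    "includedPaths": [
        {"path": "/serverId/?"},
        {"path": "/dataType/?"},
        {"path": "/collectedAt/?"},
    ],
    "excludedPaths": [{"path": "/*"}],
}


def synthetic_partition_key(server_id: str, data_type: str) -> str:
    """Combine server id and data type into one high-cardinality key."""
    return f"{server_id}:{data_type}"


def build_document(doc_id: str, server_id: str, data_type: str,
                   collected_at: datetime, payload: dict) -> dict:
    """Keep a few queryable properties at the top level and encode the
    rest of the data into a single string property."""
    return {
        "id": doc_id,
        "partitionKey": synthetic_partition_key(server_id, data_type),
        "serverId": server_id,
        "dataType": data_type,
        "collectedAt": collected_at.isoformat(),
        "payload": json.dumps(payload, separators=(",", ":")),
    }


def container_for(collected_at: datetime) -> str:
    """Name of the container that receives writes for this timestamp,
    assuming a new container per ISO week."""
    year, week, _ = collected_at.isocalendar()
    return f"metrics-{year}-w{week:02d}"


ts = datetime(2019, 3, 6, 12, 0, tzinfo=timezone.utc)
doc = build_document("abc", "sql-01", "dmv", ts, {"waits": [1, 2]})
print(doc["partitionKey"], container_for(ts))  # sql-01:dmv metrics-2019-w10
```

With a scheme like this, only the newest container needs high RUs; older containers can be scaled down to what their read traffic requires.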


About Quest

Quest has provided software solutions for the fast-paced world of enterprise IT since 1987. They are a global provider to 130,000 companies across 100 countries, including 95 percent of the Fortune 500 and 90 percent of the Global 1000.

Find out more about Spotlight Cloud on Twitter, Facebook, and LinkedIn.


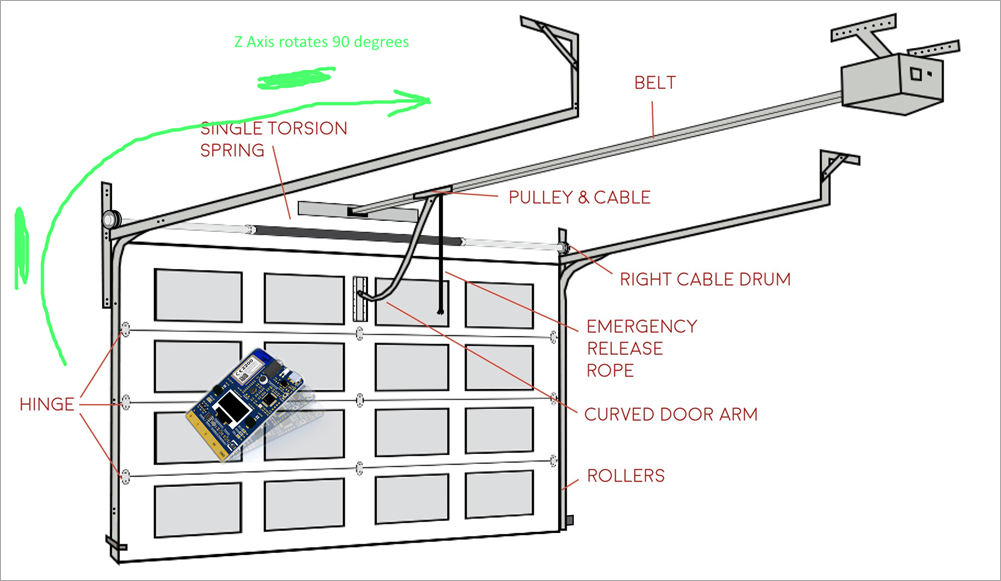

For whatever reason when a programmer tries something out for the first time, they write a "Hello World!" application. In the IoT (Internet of Things) world of devices, it's always fun to make an LED blink as a good getting started sample project.

For whatever reason when a programmer tries something out for the first time, they write a "Hello World!" application. In the IoT (Internet of Things) world of devices, it's always fun to make an LED blink as a good getting started sample project._d2226a80-7b82-4883-98f5-1c6e7d5d8822.png)

_2081bc54-15c9-4122-9edf-84d205d6fce6.png)