Today, we are announcing .NET Core 3.0 Preview 5. It includes a new JSON serializer, support for publishing single-file executables, an update to runtime roll-forward, and changes in the BCL. If you missed it, check out the improvements we released last month in .NET Core 3.0 Preview 4.

Download .NET Core 3.0 Preview 5 right now on Windows, macOS and Linux.

ASP.NET Core and EF Core are also releasing updates today.

WPF and Windows Forms Update

You should see a startup performance improvement for WPF and Windows Forms. WPF and Windows Forms assemblies are now ahead-of-time compiled with crossgen. We have seen multiple reports from the community that startup performance is significantly improved between Preview 4 and Preview 5.

We published more code for WPF as part of .NET Core 3.0 Preview 4. We expect to complete publishing WPF by Preview 7.

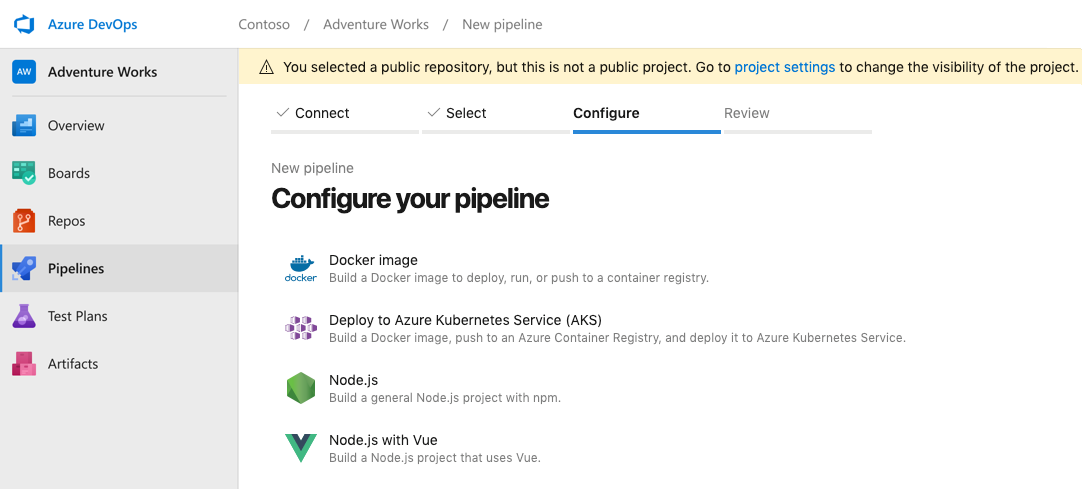

Publishing Single EXEs

You can now publish a single-file executable with dotnet publish. This form of single EXE is effectively a self-extracting executable. It contains all dependencies, including native dependencies, as resources. At startup, it copies all dependencies to a temp directory and loads them from there. It only needs to unpack dependencies once; after that, startup is fast, without any penalty.

You can enable this publishing option by adding the PublishSingleFile property to your project file or by passing it as a switch on the command line.

To produce a self-contained single EXE application, in this case for 64-bit Windows:

dotnet publish -r win10-x64 /p:PublishSingleFile=true

Single EXE applications must be architecture specific. As a result, a runtime identifier must be specified.

See Single file bundler for more information.

The assembly trimmer, ahead-of-time compilation (via crossgen), and single-file bundling are all new features in .NET Core 3.0 that can be used together or separately. Expect to hear more about these three features in future previews.

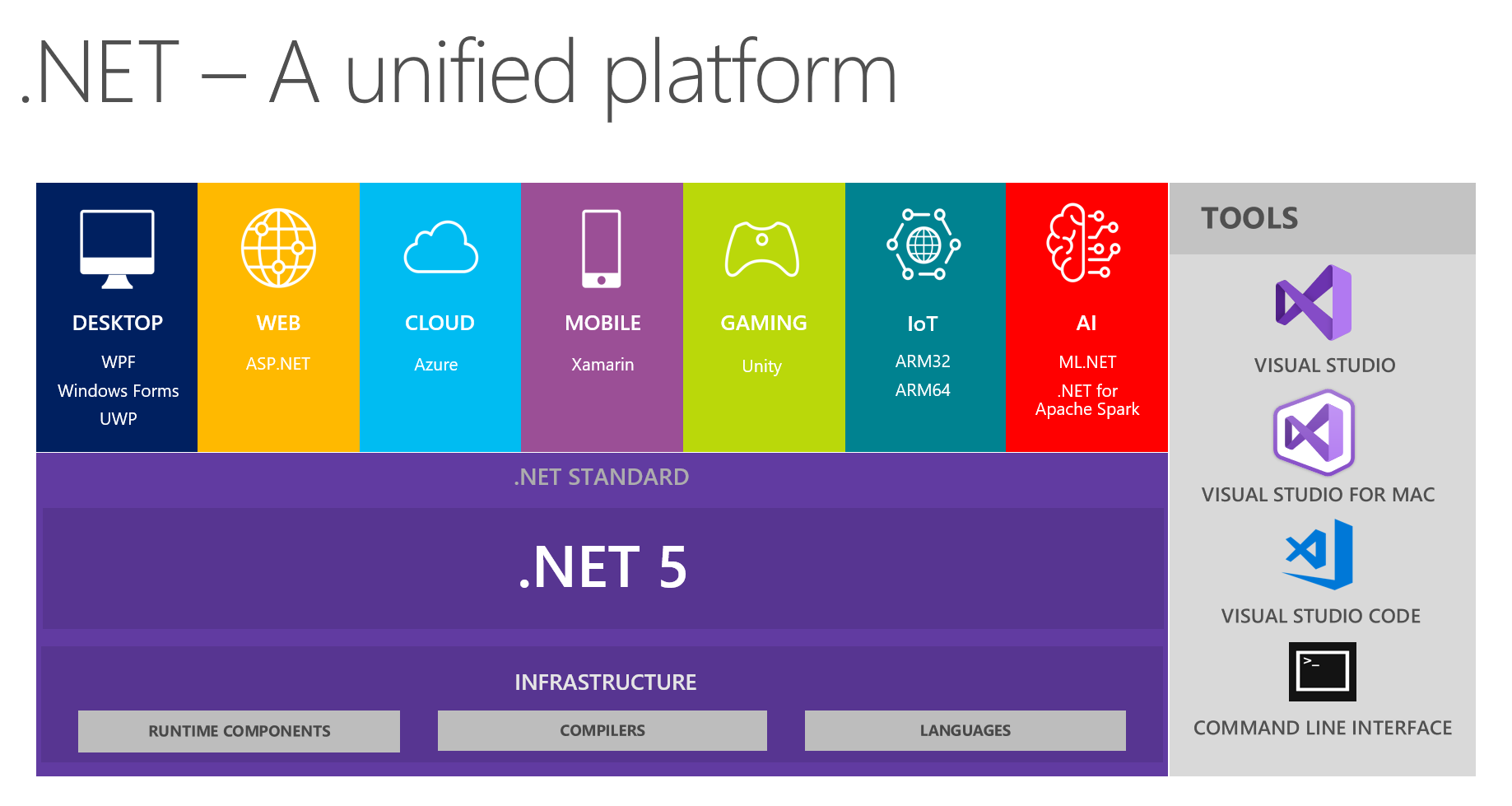

We expect that some of you will prefer a single EXE produced by an ahead-of-time compiler, as opposed to the self-extracting-executable approach that we are providing in .NET Core 3.0. The ahead-of-time compiler approach will be provided as part of the .NET 5 release.

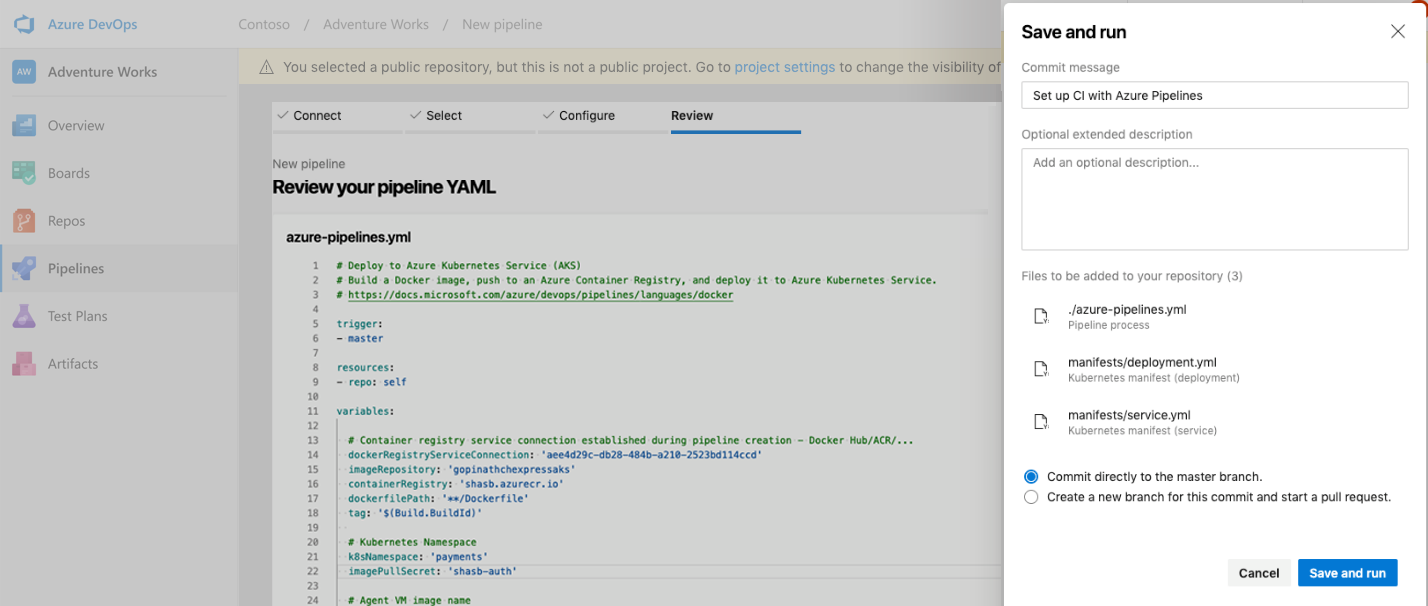

Introducing the JSON Serializer (and an update to the writer)

JSON Serializer

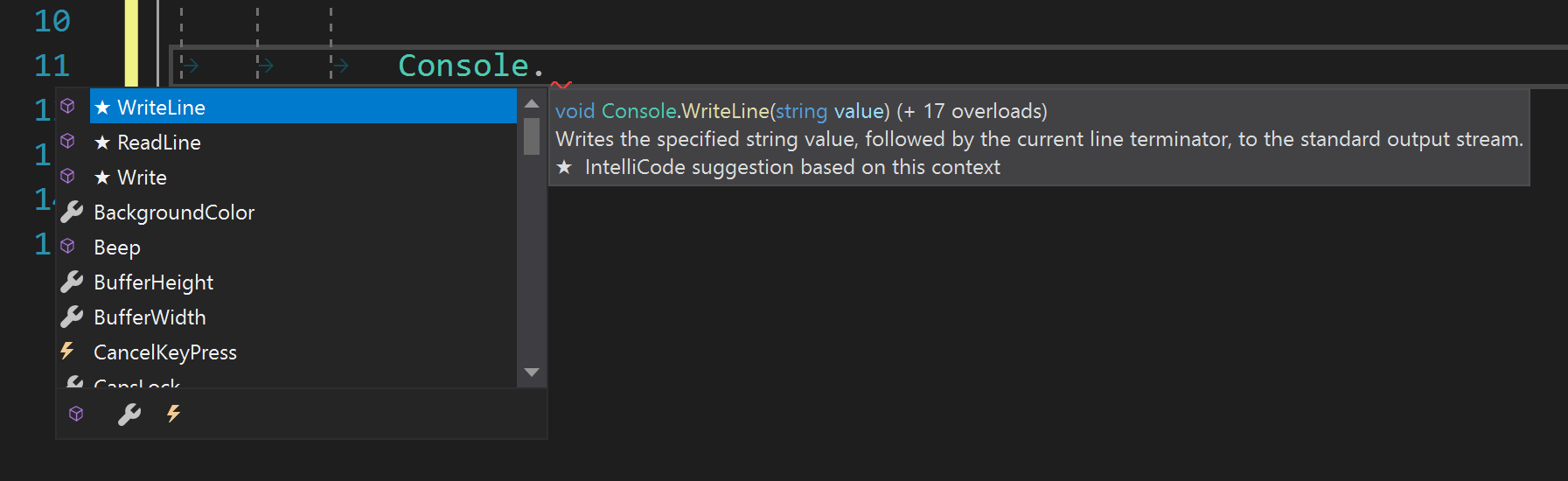

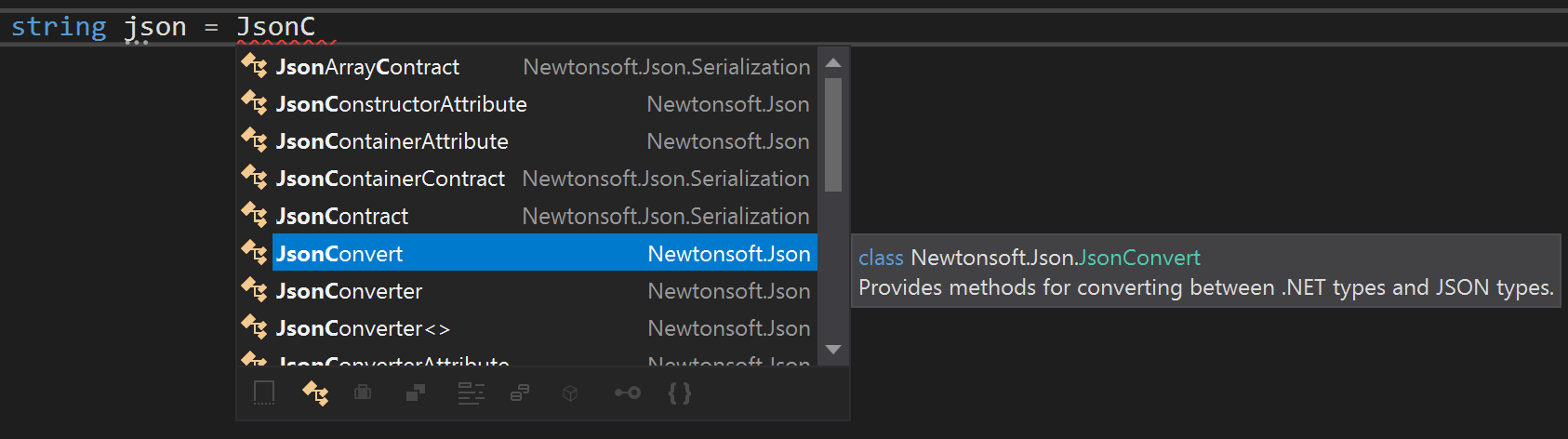

The new JSON serializer is layered on top of the high-performance Utf8JsonReader and Utf8JsonWriter. It deserializes objects from JSON and serializes objects to JSON. It keeps memory allocations to a minimum and supports reading and writing JSON asynchronously with Stream.

To get started, use the JsonSerializer class in the System.Text.Json.Serialization namespace. See the documentation for information and samples. We are extending the feature set in future previews.
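A minimal round trip with the serializer might look like the sketch below. It is written against System.Text.Json as it shipped in .NET Core 3.0, where JsonSerializer lives in the System.Text.Json namespace and exposes Serialize/Deserialize plus the Stream-based SerializeAsync/DeserializeAsync; the Preview 5 namespace and method names may differ. The Person type and the JsonSerializerSketch class are hypothetical.

```csharp
using System;
using System.IO;
using System.Text.Json;
using System.Threading.Tasks;

// Hypothetical POCO used only for this illustration.
public class Person
{
    public string Name { get; set; } = "";
    public int Age { get; set; }
}

public static class JsonSerializerSketch
{
    // Serializes to a JSON string and reads it back.
    public static Person RoundTripString(Person person)
    {
        string json = JsonSerializer.Serialize(person);
        return JsonSerializer.Deserialize<Person>(json);
    }

    // Same round trip, but asynchronously through a Stream.
    public static async Task<Person> RoundTripStreamAsync(Person person)
    {
        using var stream = new MemoryStream();
        await JsonSerializer.SerializeAsync(stream, person);
        stream.Position = 0; // rewind before reading the JSON back
        return await JsonSerializer.DeserializeAsync<Person>(stream);
    }

    public static async Task Main()
    {
        var ada = new Person { Name = "Ada", Age = 36 };
        Console.WriteLine(JsonSerializer.Serialize(ada));
        Person copy = await RoundTripStreamAsync(ada);
        Console.WriteLine($"{copy.Name} {copy.Age}");
    }
}
```

With default options, property names keep their .NET casing and the output is not indented.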

Utf8JsonWriter Design Change

Based on feedback about usability and reliability, we made a design change to the Utf8JsonWriter that was added in Preview 2. The writer is now a regular class, rather than a ref struct, and implements IDisposable. This allows us to add support for writing to streams directly. Furthermore, we removed JsonWriterState; JsonWriterOptions are now passed directly to the Utf8JsonWriter, which maintains its own state. To help offset the allocation, the Utf8JsonWriter has a new Reset API that lets you reset its state and reuse the writer. We also added a built-in IBufferWriter<T> implementation called ArrayBufferWriter<T> that can be used with the Utf8JsonWriter. Here’s a code snippet that highlights the writer changes:

using System.Buffers;
using System.Text.Json;

// New, built-in IBufferWriter<byte> that's backed by a growable array
var arrayBufferWriter = new ArrayBufferWriter<byte>();

// Utf8JsonWriter is now IDisposable
using (var writer = new Utf8JsonWriter(arrayBufferWriter, new JsonWriterOptions { Indented = true }))
{
    // Write some JSON using the existing WriteX() APIs, for example:
    writer.WriteStartObject();
    writer.WriteString("greeting", "hello");
    writer.WriteEndObject();

    writer.Flush(); // There is no isFinalBlock bool parameter anymore
}

You can read more about the design change here.
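The sketch below exercises the two capabilities described above: reusing one writer through Reset, and writing to a Stream directly. It assumes the Utf8JsonWriter API as it shipped in .NET Core 3.0 (a Reset(IBufferWriter<byte>) overload and a Stream constructor); WriterSketch and WriteRecord are hypothetical names.

```csharp
using System;
using System.Buffers;
using System.IO;
using System.Text;
using System.Text.Json;

public static class WriterSketch
{
    // Writes {"id":<id>} and flushes it to the writer's current output.
    private static void WriteRecord(Utf8JsonWriter writer, int id)
    {
        writer.WriteStartObject();
        writer.WriteNumber("id", id);
        writer.WriteEndObject();
        writer.Flush();
    }

    // One writer instance, two destinations: Reset(IBufferWriter<byte>) clears the
    // writer's state and points it at a new output instead of allocating a new writer.
    public static (string First, string Second) WriteWithReset()
    {
        var first = new ArrayBufferWriter<byte>();
        var second = new ArrayBufferWriter<byte>();
        using var writer = new Utf8JsonWriter(first);
        WriteRecord(writer, 1);
        writer.Reset(second);
        WriteRecord(writer, 2);
        return (Encoding.UTF8.GetString(first.WrittenSpan),
                Encoding.UTF8.GetString(second.WrittenSpan));
    }

    // Because the writer is now a class, it can target a Stream directly.
    public static string WriteToStream()
    {
        using var stream = new MemoryStream();
        using (var writer = new Utf8JsonWriter(stream))
        {
            WriteRecord(writer, 3);
        }
        return Encoding.UTF8.GetString(stream.ToArray());
    }

    public static void Main()
    {
        var (a, b) = WriteWithReset();
        Console.WriteLine(a);
        Console.WriteLine(b);
        Console.WriteLine(WriteToStream());
    }
}
```

Flushing before Reset matters: the sketch writes each record completely before switching the writer to a new output.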

Index and Range

In the previous preview, the framework supported Index and Range by providing overloads of common operations, such as indexers and methods like Substring, that accepted Index and Range values. Based on feedback from early adopters, we decided to simplify this by letting the compiler call the existing indexers instead. The Index and Range Changes document has more details on how this works, but the basic idea is that the compiler can call an int-based indexer by extracting the offset from the given Index value. This means that indexing using Index now works on all types that provide an indexer and have a Count or Length property. For Range, the compiler usually cannot use an existing indexer, because those only return single values. However, the compiler now allows indexing using Range when the type provides either an indexer that accepts Range or a method called Slice. This enables you to make indexing using Range work on interfaces and types you don’t control by providing an extension method.
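To see the compiler pattern in action, here is a sketch of a hypothetical Digits type that defines no Index or Range members of its own, only a Length property, an int indexer, and a Slice method. It assumes the pattern as it shipped in C# 8.0, where the compiler looks for Slice(int start, int length).

```csharp
using System;

// Hypothetical collection type used only for illustration.
public sealed class Digits
{
    private readonly int[] _items;

    public Digits(params int[] items) => _items = items;

    // "Countable": the compiler needs a Length (or Count) property.
    public int Length => _items.Length;

    // Plain int indexer: d[^1] is lowered to d[d.Length - 1].
    public int this[int index] => _items[index];

    // Slice(start, length): d[1..3] is lowered to d.Slice(1, 2).
    public Digits Slice(int start, int length) =>
        new Digits(_items.AsSpan(start, length).ToArray());

    public override string ToString() => string.Join(",", _items);

    public static void Main()
    {
        var d = new Digits(0, 1, 2, 3, 4);
        Console.WriteLine(d[^1]);   // last element
        Console.WriteLine(d[1..3]); // elements at indexes 1 and 2
        Console.WriteLine(d[^2..]); // last two elements
    }
}
```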

Existing code that uses these indexers will continue to compile and work as expected, as demonstrated by the following code.

string s = "0123456789";

char lastChar = s[^1]; // lastChar = '9'

string startFromIndex2 = s[2..]; // startFromIndex2 = "23456789"

The following String methods have been removed:

public String Substring(Index startIndex);

public String Substring(Range range);

Any code that uses these String methods must be updated to use the indexers instead:

string fromEnd = s[^10..]; // Replaces s.Substring(^10);

string middle = s[2..8]; // Replaces s.Substring(2..8);

The following Range method previously returned OffsetAndLength:

public Range.OffsetAndLength GetOffsetAndLength(int length);

It will now simply return a tuple instead:

public ValueTuple<int, int> GetOffsetAndLength(int length);

The following code sample will continue to compile and run as before:

Range range = 2..8;

(int offset, int length) = range.GetOffsetAndLength(20); // offset = 2, length = 6
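GetOffsetAndLength is also a natural building block when you write your own Range-aware helpers, because it resolves from-end (^) indices against a concrete length and throws ArgumentOutOfRangeException if the range does not fit. The Take helper below is a hypothetical illustration, not a framework API.

```csharp
using System;

public static class RangeHelpers
{
    // Copies the elements selected by `range` out of `source`.
    // GetOffsetAndLength turns e.g. 2..^2 into (offset: 2, length: source.Length - 4).
    public static T[] Take<T>(T[] source, Range range)
    {
        (int offset, int length) = range.GetOffsetAndLength(source.Length);
        var result = new T[length];
        Array.Copy(source, offset, result, 0, length);
        return result;
    }

    public static void Main()
    {
        int[] numbers = { 0, 1, 2, 3, 4, 5, 6, 7, 8, 9 };
        Console.WriteLine(string.Join(",", Take(numbers, 2..^2)));

        Range lastFive = ^5..;
        (int offset, int length) = lastFive.GetOffsetAndLength(20);
        Console.WriteLine($"{offset} {length}");
    }
}
```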

New Japanese Era (Reiwa)

On May 1st, 2019, Japan started a new era called Reiwa. Software that has support for Japanese calendars, like .NET Core, must be updated to accommodate Reiwa. .NET Core and .NET Framework have been updated and correctly handle Japanese date formatting and parsing with the new era.

.NET relies on operating system or other updates to correctly process Reiwa dates. If you or your customers are using Windows, download the latest updates for your Windows version. If running macOS or Linux, download and install ICU version 64.2, which supports the new Japanese era.

The Handling a new era in the Japanese calendar in .NET blog post has more information about the changes made in .NET to support the new Japanese era.
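Because the result depends on the OS or ICU data, it can be useful to check whether a given machine knows about Reiwa. The sketch below (ReiwaCheck is a hypothetical name) compares the JapaneseCalendar era for April 30, 2019, the last day of Heisei, with May 1, 2019. If the calendar data does not include Reiwa, both dates fall in Heisei, and May 1, 2019 is reported as Heisei year 31 rather than Reiwa year 1.

```csharp
using System;
using System.Globalization;

public static class ReiwaCheck
{
    // True when the calendar data available to this process starts a new era on 2019-05-01.
    public static bool KnowsReiwa()
    {
        var calendar = new JapaneseCalendar();
        int lastHeiseiDay = calendar.GetEra(new DateTime(2019, 4, 30));
        int firstReiwaDay = calendar.GetEra(new DateTime(2019, 5, 1));
        return firstReiwaDay != lastHeiseiDay;
    }

    public static void Main()
    {
        var calendar = new JapaneseCalendar();
        Console.WriteLine($"Reiwa recognized: {KnowsReiwa()}");
        // 1 if Reiwa is known, 31 (Heisei 31) otherwise.
        Console.WriteLine($"Year in era for 2019-05-01: {calendar.GetYear(new DateTime(2019, 5, 1))}");
    }
}
```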

Hardware Intrinsic API changes

The Avx2.ConvertToVector256* methods were changed to return a signed rather than an unsigned type. This puts them in line with the Sse41.ConvertToVector128* methods and the corresponding native intrinsics. As an example, Vector256<ushort> ConvertToVector256UInt16(Vector128<byte>) is now Vector256<short> ConvertToVector256Int16(Vector128<byte>).

The Sse41/Avx.ConvertToVector128/256* methods were split into those that take a Vector128/256<T> and those that take a T*. As an example, ConvertToVector256Int16(Vector128<byte>) now also has a ConvertToVector256Int16(byte*) overload. This was done because the underlying instruction that takes an address does a partial vector read (rather than a full vector read or a scalar read). This meant we could not always emit the optimal instruction encoding when the user had to read from memory. This split allows the user to explicitly select the addressing form of the instruction when needed (such as when you don’t already have a Vector128<T>).
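As an illustration of the signed return type, the sketch below zero-extends 16 bytes into 16 shorts with Avx2.ConvertToVector256Int16, falling back to a scalar loop when AVX2 is not available. It uses the Vector128<byte> overload rather than the byte* one so it compiles without unsafe code; WidenSketch is a hypothetical name, and reading the input via MemoryMarshal.Read is just one convenient way to build the vector.

```csharp
using System;
using System.Runtime.InteropServices;
using System.Runtime.Intrinsics;
using System.Runtime.Intrinsics.X86;

public static class WidenSketch
{
    // Zero-extends 16 bytes to 16 shorts.
    public static short[] Widen(byte[] input)
    {
        if (input.Length != 16)
            throw new ArgumentException("Expected exactly 16 bytes.", nameof(input));

        var result = new short[16];
        if (Avx2.IsSupported)
        {
            // Reinterpret the 16 input bytes as one Vector128<byte>.
            Vector128<byte> bytes = MemoryMarshal.Read<Vector128<byte>>(input);
            // Vector256<short>, not Vector256<ushort>: the new signed return type.
            Vector256<short> widened = Avx2.ConvertToVector256Int16(bytes);
            for (int i = 0; i < 16; i++)
                result[i] = widened.GetElement(i);
        }
        else
        {
            // Scalar fallback with the same zero-extension semantics.
            for (int i = 0; i < 16; i++)
                result[i] = input[i];
        }
        return result;
    }

    public static void Main()
    {
        var input = new byte[16];
        for (int i = 0; i < 16; i++) input[i] = (byte)(i * 17);
        Console.WriteLine(string.Join(",", Widen(input)));
    }
}
```

Because the source bytes are zero-extended, a byte of 255 becomes 255 in the Int16 result; the signed element type does not change the values.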

The FloatComparisonMode enum entries and the Sse/Sse2.Compare methods were renamed to clarify that it is the operation, not the inputs, that is ordered or unordered. The parts of each name were also reordered to be more consistent across the SSE and AVX implementations. For example, Sse.CompareEqualOrderedScalar is now Sse.CompareScalarOrderedEqual. Likewise, for the AVX versions, Avx.CompareScalar(left, right, FloatComparisonMode.OrderedEqualNonSignalling) is now Avx.CompareScalar(left, right, FloatComparisonMode.EqualOrderedNonSignalling).

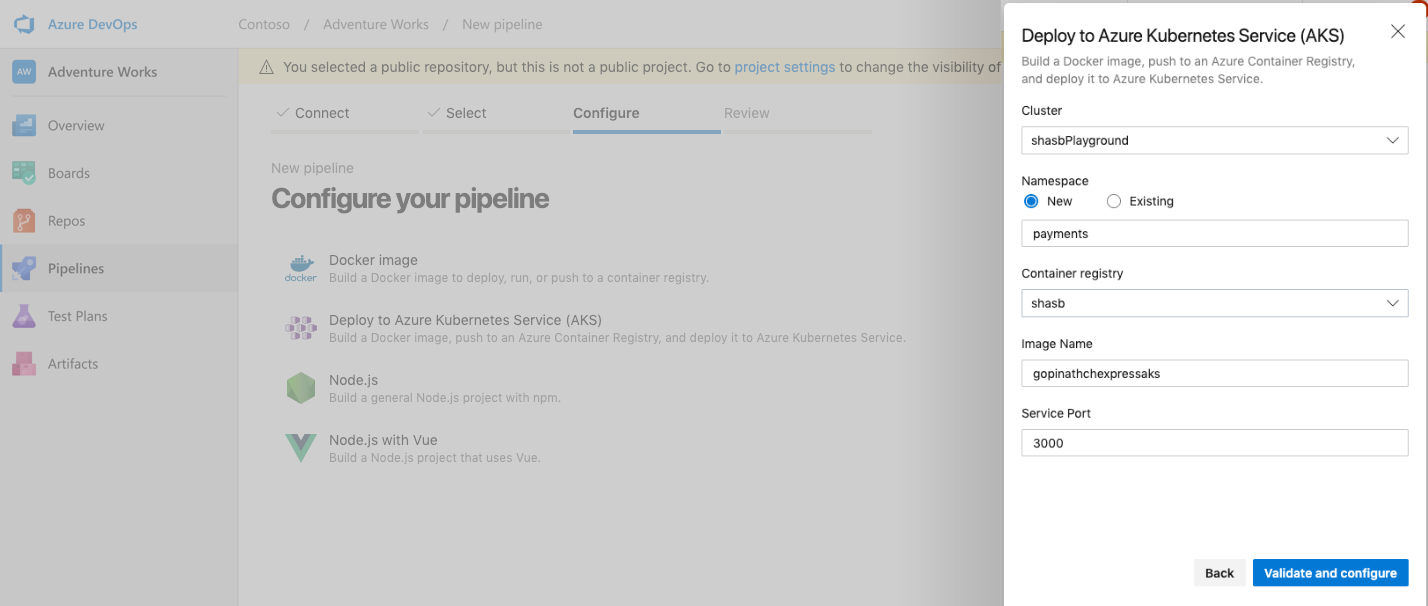

.NET Core runtime roll-forward policy update

The .NET Core runtime (more precisely, the runtime binder) now enables major-version roll-forward as an opt-in policy. The runtime binder already enables roll-forward on patch and minor versions as a default policy. We never intend to enable major-version roll-forward as a default policy; however, it is important for some scenarios.

We also believe that it is important to expose a comprehensive set of runtime binding configuration options to give you the control you need.

There is a new knob called RollForward, which accepts the following values:

LatestPatch: Roll forward to the highest patch version. This disables minor version roll forward.

Minor: Roll forward to the lowest higher minor version, if the requested minor version is missing. If the requested minor version is present, then the LatestPatch policy is used. This is the default policy.

Major: Roll forward to the lowest higher major version, and lowest minor version, if the requested major version is missing. If the requested major version is present, then the Minor policy is used.

LatestMinor: Roll forward to the highest minor version, even if the requested minor version is present.

LatestMajor: Roll forward to the highest major and highest minor version, even if the requested major version is present.

Disable: Do not roll forward. Only bind to the specified version. This policy is not recommended for general use, since it disables the ability to roll forward to the latest patches. It is only recommended for testing.
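To make the policies concrete, the sketch below models them as a pure function over a list of installed runtime versions. It is a hypothetical illustration of the rules listed above, not the host's implementation: RollForwardPolicy and RollForwardSketch are made-up names, it ignores prerelease versions, it assumes that once a major.minor line is chosen the highest patch in it is used, and it reads LatestMinor as staying within the requested major version.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical enum mirroring the RollForward values listed above.
public enum RollForwardPolicy { LatestPatch, Minor, Major, LatestMinor, LatestMajor, Disable }

public static class RollForwardSketch
{
    // Returns the installed version a host following `policy` would bind to, or null.
    public static Version? Resolve(Version requested, IEnumerable<Version> installed, RollForwardPolicy policy)
    {
        // Roll-forward never selects a version lower than the requested one.
        List<Version> eligible = installed.Where(v => v >= requested).ToList();

        // Assumption: once a major.minor line is chosen, bind to its highest patch.
        Version? LatestPatchOf(int major, int minor) =>
            eligible.Where(v => v.Major == major && v.Minor == minor)
                    .OrderByDescending(v => v)
                    .FirstOrDefault();

        Version? MinorPolicy()
        {
            // Requested minor present: behave like LatestPatch.
            if (eligible.Any(v => v.Major == requested.Major && v.Minor == requested.Minor))
                return LatestPatchOf(requested.Major, requested.Minor);
            // Otherwise take the lowest higher minor within the same major.
            Version? next = eligible.Where(v => v.Major == requested.Major)
                                    .OrderBy(v => v)
                                    .FirstOrDefault();
            return next is null ? null : LatestPatchOf(next.Major, next.Minor);
        }

        switch (policy)
        {
            case RollForwardPolicy.Disable:
                return eligible.FirstOrDefault(v => v == requested);
            case RollForwardPolicy.LatestPatch:
                return LatestPatchOf(requested.Major, requested.Minor);
            case RollForwardPolicy.Minor:
                return MinorPolicy();
            case RollForwardPolicy.Major:
                if (eligible.Any(v => v.Major == requested.Major))
                    return MinorPolicy();
                // Lowest higher major, and the lowest minor within it.
                Version? lowest = eligible.OrderBy(v => v).FirstOrDefault();
                return lowest is null ? null : LatestPatchOf(lowest.Major, lowest.Minor);
            case RollForwardPolicy.LatestMinor:
                return eligible.Where(v => v.Major == requested.Major)
                               .OrderByDescending(v => v)
                               .FirstOrDefault();
            case RollForwardPolicy.LatestMajor:
                return eligible.OrderByDescending(v => v).FirstOrDefault();
            default:
                throw new ArgumentOutOfRangeException(nameof(policy));
        }
    }

    public static void Main()
    {
        var installed = new[] { "3.0.0", "3.0.2", "3.1.1", "3.1.4", "5.0.0" }
            .Select(s => Version.Parse(s))
            .ToList();
        var requested = Version.Parse("3.0.1");
        foreach (RollForwardPolicy policy in Enum.GetValues(typeof(RollForwardPolicy)))
            Console.WriteLine($"{policy}: {Resolve(requested, installed, policy)?.ToString() ?? "no match"}");
    }
}
```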

See Runtime Binding Behavior and dotnet/core-setup #5691 for more information.

Making .NET Core runtime Docker images for Linux smaller

We reduced the size of the runtime by about 10 MB by using a feature we call “partial crossgen”.

By default, when we ahead-of-time compile an assembly, we compile all methods. These natively compiled methods increase the size of an assembly, sometimes by a lot (the cost is quite variable). In many cases, only a subset, sometimes a small subset, of methods is used at startup. That means the cost and benefit can be asymmetric. Partial crossgen enables us to pre-compile only the methods that matter.

To enable this outcome, we run several .NET Core applications and collect data about which methods are called. We call this process “training”. The training data is called “IBC”, and is used as an input to crossgen to determine which methods to compile.

This process is only useful if we train the product with representative applications. Otherwise, it can hurt startup. At present, we are targeting making Docker container images for Linux smaller. As a result, it’s only the .NET Core runtime build for Linux that is smaller and where we used partial crossgen. That enables us to train .NET Core with a smaller set of applications, because the scenario is relatively narrow. Our training has been focused on the .NET Core SDK (for example, running dotnet build and dotnet test), ASP.NET Core applications and PowerShell.

We will likely expand the use of partial crossgen in future releases.

Docker Updates

We now support Alpine ARM64 runtime images. We also switched the default Linux image to Debian 10 / Buster. Debian 10 has not been released yet. We are betting that it will be released before .NET Core 3.0.

We added support for Ubuntu 19.04 / Disco. We don’t usually add support for Ubuntu non-LTS releases. We added support for 19.04 as part of our process of being ready for Ubuntu 20.04, the next LTS release. We intend to add support for 19.10 when it is released.

We posted an update last week about using .NET Core and Docker together. These improvements are covered in more detail in that post.

AssemblyLoadContext Updates

We are continuing to improve AssemblyLoadContext. We aim to make simple plug-in models work without much effort (or code) on your part, and to make complex plug-in models possible. In Preview 5, we enabled implicit type and assembly loading via Type.GetType when the caller is not the application, for example a serializer.

See the AssemblyLoadContext.CurrentContextualReflectionContext design document for more information.
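The sketch below shows the scoping behavior, assuming the API as it shipped in .NET Core 3.0: EnterContextualReflection returns a disposable scope, and CurrentContextualReflectionContext is null outside any scope. The context name and the ContextualReflectionSketch class are hypothetical; a real plug-in host would derive from AssemblyLoadContext and override Load.

```csharp
using System;
using System.Runtime.Loader;

public static class ContextualReflectionSketch
{
    public static (bool Before, bool Inside, bool After) Probe()
    {
        // Hypothetical plug-in context; it falls back to the default context for every load.
        var pluginContext = new AssemblyLoadContext("plugin-sketch");

        bool before = AssemblyLoadContext.CurrentContextualReflectionContext == null;
        bool inside;
        using (pluginContext.EnterContextualReflection())
        {
            // Inside this scope, loads triggered by calls such as Type.GetType(string)
            // from code like a serializer are resolved through pluginContext.
            inside = AssemblyLoadContext.CurrentContextualReflectionContext == pluginContext;
        }
        // Disposing the scope restores the previous (unset) contextual context.
        bool after = AssemblyLoadContext.CurrentContextualReflectionContext == null;
        return (before, inside, after);
    }

    public static void Main() => Console.WriteLine(Probe());
}
```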

COM-callable managed components

You can now create COM-callable managed components on Windows. This capability is critical for using .NET Core with COM add-in models and for providing parity with .NET Framework.

With .NET Framework, we used mscoree.dll as the COM server. With .NET Core, we provide a native launcher dll that gets added to the component bin directory when you build your COM component.
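A COM-visible component is an ordinary class library whose interface and class carry COM attributes. The sketch below is a hypothetical minimal component; the GUIDs are placeholders that you should replace with your own. It assumes the project sets the EnableComHosting property to true, which is how the .NET Core 3.0 SDK emits the native launcher (a ProjectName.comhost.dll that you register with regsvr32); the exact setup in Preview 5 may differ, so treat the COM Server Demo as the reference.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical COM interface; the GUID is a placeholder.
[ComVisible(true)]
[Guid("6F1C2E6A-3B6D-4C5E-9B1A-2F7D8E4C1A10")]
[InterfaceType(ComInterfaceType.InterfaceIsIUnknown)]
public interface IGreeter
{
    string Greet(string name);
}

// Hypothetical coclass that COM clients activate through the native launcher.
[ComVisible(true)]
[Guid("0B7E5D3C-9A21-4F6E-8C4D-5E3A2B1C0D9F")]
[ClassInterface(ClassInterfaceType.None)]
public class Greeter : IGreeter
{
    public string Greet(string name) => $"Hello, {name}";
}
```

Managed code can still use the class directly; COM clients reach it through the registered launcher instead of mscoree.dll.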

See COM Server Demo to try out this new capability.

GC Large page support

Large Pages (also known as Huge Pages on Linux) is a feature where the operating system is able to establish memory regions larger than the native page size (often 4K) to improve performance of the application requesting these large pages.

When a virtual-to-physical address translation occurs, a cache called the translation lookaside buffer (TLB) is first consulted (often in parallel) to check whether a physical translation for the virtual address being accessed is already available; a hit avoids a page-table walk, which can be expensive. Each large-page translation occupies a single TLB entry, but that entry covers a page that is typically hundreds of times larger than a native page (for example, 2 MB versus 4 KB on x64, a factor of 512). The same number of TLB entries therefore maps far more memory, which can increase performance for frequently accessed memory.
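A quick worked example shows why page size matters. Assume a hypothetical TLB with 64 entries (real entry counts vary by CPU and cache level). The memory the TLB can map without a miss, its reach, is the number of entries times the page size:

```latex
\begin{aligned}
\text{reach} &= N_{\text{entries}} \times S_{\text{page}} \\
64 \times 4\,\text{KiB} &= 256\,\text{KiB} \\
64 \times 2\,\text{MiB} &= 128\,\text{MiB} = 512 \times 256\,\text{KiB}
\end{aligned}
```

With 2 MiB pages, the same 64 entries cover 512 times as much memory, which is where the reduction in TLB misses comes from.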

The GC can now be configured with the GCLargePages setting, an opt-in feature, to allocate large pages on Windows. Using large pages reduces TLB misses and can therefore potentially increase application performance. It does, however, come with some limitations.

Closing

Thanks for trying out .NET Core 3.0. Please continue to give us feedback, either in the comments or on GitHub. We are actively looking for reports and will continue to make changes based on your feedback.

Take a look at the .NET Core 3.0 Preview 1, Preview 2, Preview 3 and Preview 4 posts if you missed those. With this post, they describe the complete set of new capabilities that have been added so far with the .NET Core 3.0 release.

The post Announcing .NET Core 3.0 Preview 5 appeared first on .NET Blog.