![Ran Tech / Microsoft 3/9/17]()

IoT is transforming every business on the planet, and that transformation is accelerating. Companies are harnessing billions of IoT devices to find valuable insights into critical parts of their business that were previously not connected: how customers are using their products, when to service assets before they break down, how to reduce energy consumption, how to optimize operations, and thousands of other use cases limited only by companies’ imagination.

Microsoft is leading in IoT because we’re passionate about simplifying IoT so any company can benefit from it quickly and securely.

Last year we announced a $5 billion commitment to IoT, and this year we highlighted the momentum we are seeing in the industry. This week, at Microsoft Build, our premier developer conference in Seattle, we’re thrilled to share our latest innovations that further simplify IoT and dramatically accelerate time to value for customers and partners.

Accelerating IoT

Developing a cloud-based IoT solution with Azure IoT has never been faster or more secure, yet we’re always looking for ways to make it easier. From working with customers and partners, we’ve seen an opportunity to accelerate on the device side.

Part of the challenge we see is the tight coupling between the software written on devices and the software that has to match it in the cloud. To illustrate this, it’s worth looking at a similar problem from the past and how it was solved.

Early versions of Windows faced a challenge in supporting a broad set of connected devices like keyboards and mice. Each device came with its own software, which had to be installed on Windows for the device to function. The software on the device and the software that had to be installed on Windows had a tight coupling, and this tight coupling made the development process slow and fragile for device makers.

Windows solved this with Plug and Play, which at its core was a capability model that devices could declare and present to Windows when they were connected. This capability model made it possible for thousands of different devices to connect to Windows and be used without any software having to be installed on Windows.

IoT Plug and Play

Late last week, we announced IoT Plug and Play, which is based on an open modeling language that allows IoT devices to declare their capabilities. That declaration, called a device capability model, is presented when IoT devices connect to cloud solutions like Azure IoT Central and partner solutions, which can then automatically understand the device and start interacting with it—all without writing any code.
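To make the idea of a device capability model concrete, here is a minimal Python sketch of the cloud side: a service that learns what a device can do purely from the model the device presents. The model's shape and field names (`id`, `telemetry`, `properties`, `commands`, `writable`), the `urn:example:thermostat:1` identifier, and the `GenericDeviceView` class are all hypothetical simplifications for illustration. They are not the actual IoT Plug and Play modeling language or any Azure SDK API.

```python
import json

# Hypothetical, simplified capability model. The real IoT Plug and Play
# modeling language defines its own schema; these field names are illustrative.
CAPABILITY_MODEL_JSON = """
{
  "id": "urn:example:thermostat:1",
  "telemetry": [{"name": "temperature", "schema": "double", "unit": "celsius"}],
  "properties": [{"name": "targetTemperature", "schema": "double", "writable": true}],
  "commands": [{"name": "reboot"}]
}
"""

# Map declared schema names to Python converters (unknown names raise KeyError).
_SCHEMA_TYPES = {"double": float, "integer": int, "string": str}


class GenericDeviceView:
    """Hypothetical cloud-side view built only from the model a device presents."""

    def __init__(self, model: dict) -> None:
        self.model_id = model["id"]
        self.telemetry = {t["name"]: t for t in model.get("telemetry", [])}
        self.properties = {p["name"]: p for p in model.get("properties", [])}
        self.commands = {c["name"] for c in model.get("commands", [])}

    def parse_telemetry(self, message: dict) -> dict:
        """Keep only declared telemetry fields, converted to their declared types."""
        result = {}
        for name, value in message.items():
            spec = self.telemetry.get(name)
            if spec is None:
                continue  # fields the model does not declare are ignored
            result[name] = _SCHEMA_TYPES[spec["schema"]](value)
        return result

    def can_write(self, prop: str) -> bool:
        """True only for properties the model declares as writable."""
        spec = self.properties.get(prop)
        return bool(spec and spec.get("writable", False))

    def can_invoke(self, command: str) -> bool:
        """True only for commands the model declares."""
        return command in self.commands


if __name__ == "__main__":
    view = GenericDeviceView(json.loads(CAPABILITY_MODEL_JSON))
    print(view.parse_telemetry({"temperature": "21.5", "vendorDebug": 7}))
    print(view.can_write("targetTemperature"), view.can_invoke("reboot"))
```

Because the view is derived from the declaration rather than hard-coded per device, supporting a new device type in this sketch takes only a new model, not new cloud code. That is the same decoupling the Windows Plug and Play analogy describes.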

IoT Plug and Play also enables our hardware partners to build IoT Plug and Play compatible devices, which can then be certified with our Azure Certified for IoT program and used by customers and partners right away. This approach works with devices running any operating system, be it Linux, Android, Azure Sphere OS, Windows IoT, RTOSs, and more. And all of our IoT Plug and Play support is open source as always.

Finally, Visual Studio Code will support modeling an IoT Plug and Play device capability model as well as generating IoT device software based on that model, which dramatically accelerates IoT device software development.

We’ll be demonstrating IoT Plug and Play at Build, and it will be available in preview this summer. To design IoT Plug and Play, we’ve worked with a large set of launch partners to ensure their hardware is certified ready:

![Build_IoT_replace]()

Certified-ready devices are now published in the Azure IoT Device Catalog for the preview. Azure IoT Central and Azure IoT Hub will be the first services integrated with IoT Plug and Play, and we will add support for Azure Digital Twins and other solutions in the months to come. Watch this video to learn more about IoT Plug and Play and read this blog post for more details on IoT Plug and Play support in Azure IoT Central.

Announcing IoT Plug and Play connectivity partners

With increased options for low-power networking, the role of cellular technologies in IoT projects is on the rise. Today we’re introducing IoT Plug and Play connectivity partners. Deep integration between these partners’ technologies and Azure IoT simplifies customer deployments and adds new capabilities.

This week at Build, we are highlighting the first of these integrations, which leverages Trust Onboard from Twilio. The integration uses security features built into the SIM to automatically authenticate and connect to Azure, providing a secure way to uniquely identify IoT devices that works with current manufacturing processes.

These are some of the many connectivity partners we are working with:

![Build_IoT_2]()

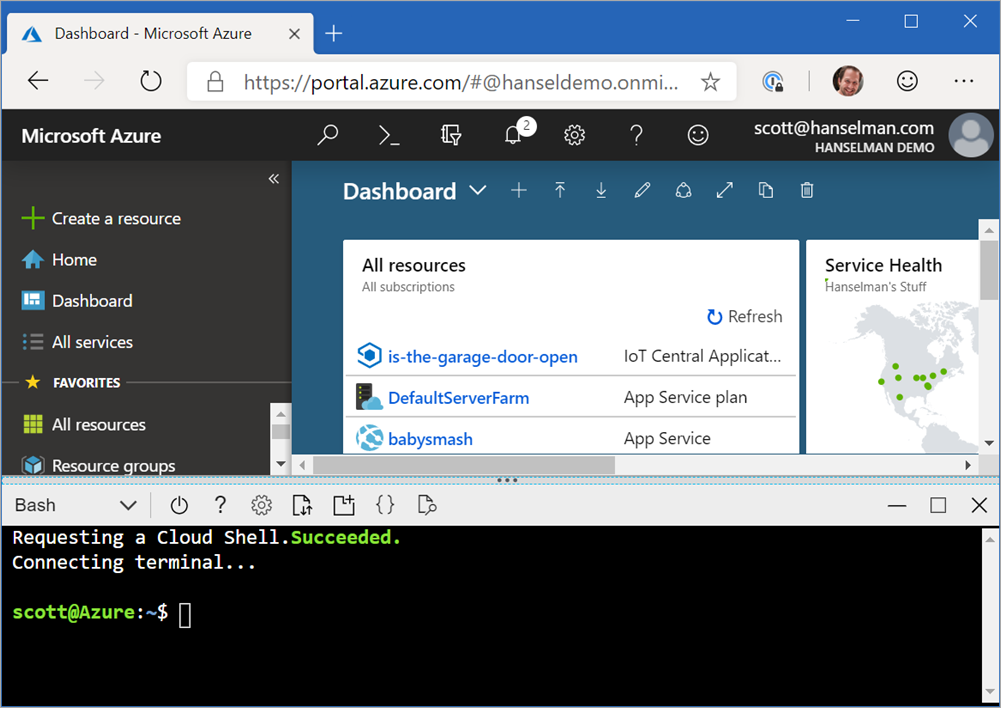

Making Azure IoT Central more powerful for developers

Last year we announced the general availability of Azure IoT Central, which enables customers and partners to provision an IoT application in 15 seconds, customize it in hours, and go to production the same day—all without writing code in the cloud.

While many customers build their IoT solutions directly on our Azure IoT platform services, we’re seeing a growing number of customers and partners who like the rapid application development that Azure IoT Central provides. And, of course, Azure IoT Central is built on the same great Azure IoT platform services.

Today at Build, we’re announcing a set of new features that make Azure IoT Central more capable and easier for developers to use. We’ll show some of these innovations, such as new personalization features that make it easy for customers and partners to modify Azure IoT Central’s UI to match their own look and feel. In the Build keynote, we’ll show how Starbucks is using this personalization feature for their Azure IoT Central solution connected to Azure Sphere devices in their stores.

We’ll also demonstrate Azure IoT Central working with IoT Plug and Play to show how fast and easy this makes it to build an end-to-end IoT solution, with Microsoft still wearing the pager and keeping everything up and running so customers and partners can focus on the benefits IoT provides. Watch this video to learn more about Azure IoT Central announcements.

The growing Azure Sphere hardware ecosystem

Azure Sphere is Microsoft’s comprehensive solution for easily creating secured MCU-powered IoT devices. Azure Sphere is an integrated system that includes MCUs with built-in Microsoft security technology, an OS based on a custom Linux kernel, and a cloud-based security service. Azure Sphere delivers secured communications between device and cloud, device authentication and attestation, and ongoing OS and security updates. Azure Sphere provides robust defense-in-depth device security to limit the reach and impact of remote attacks and to renew device health through security updates.

At Build this week, we’ll showcase a new set of solutions such as hardware modules that speed up time to market for device makers, development kits that help organizations prototype quickly, and our new guardian modules.

Guardian modules are a new class of device built on Azure Sphere that protect brownfield equipment, mitigating risks and unlocking the benefits of IoT. They attach physically to brownfield equipment with no equipment redesign required, processing data and controlling devices without ever exposing vital operational equipment to the network. Through guardian modules, Azure Sphere secures brownfield devices, protects operational equipment from disabling attacks, simplifies device retrofit projects, and boosts equipment efficiency through over-the-air updates and IoT connectivity.

The seven modules and devkits on display at Build are:

- Avnet Guardian Module. Unlocks brownfield IoT by bringing Azure Sphere’s security to equipment previously deemed too critical to be connected. Available soon.

- Avnet MT3620 Starter Kit. Azure Sphere prototyping and development platform. Connectors allow easy expandability options with a range of MikroE Click and Grove modules. Available May 2019.

- Avnet Wi-Fi Module. Azure Sphere-based module designed for easy final product assembly. Simplifies quality assurance with stamp hole (castellated) pin design. Available June 2019.

- AI-Link WF-M620-RSC1 Wi-Fi Module. Designed for cost-sensitive applications. Simplifies quality assurance with stamp hole (castellated) pin design. Available now.

- SEEED MT3620 Development Board. Designed for comprehensive prototyping. Available expansion shields enable Ethernet connectivity and support for Grove modules. Available now.

- SEEED MT3620 Mini Development Board. Designed for size-constrained prototypes. Built on the AI-Link module for a quick path from prototype to commercialization. Available May 2019.

- USI Dual Band Wi-Fi + Bluetooth Combo Module. Supports BLE and Bluetooth 5 Mesh. Can also work as an NFC tag (for non-contact Bluetooth pairing and device provisioning). Available soon.

For those who want to learn more about the modules, you can find specs for each and links to more information on our Azure Sphere hardware ecosystem page.

See Azure Sphere in action at Build

Azure Sphere is also taking center stage at Build during Satya Nadella’s keynote this week. Microsoft customer and fellow Seattle-area company Starbucks will showcase how it is testing Azure IoT capabilities and guardian modules built on Azure Sphere within select equipment to enable partners and employees to better engage with customers, manage energy consumption and waste reduction, ensure beverage consistency, and facilitate predictive maintenance. The company’s solution will also be on display in the Starbucks Technology booth.

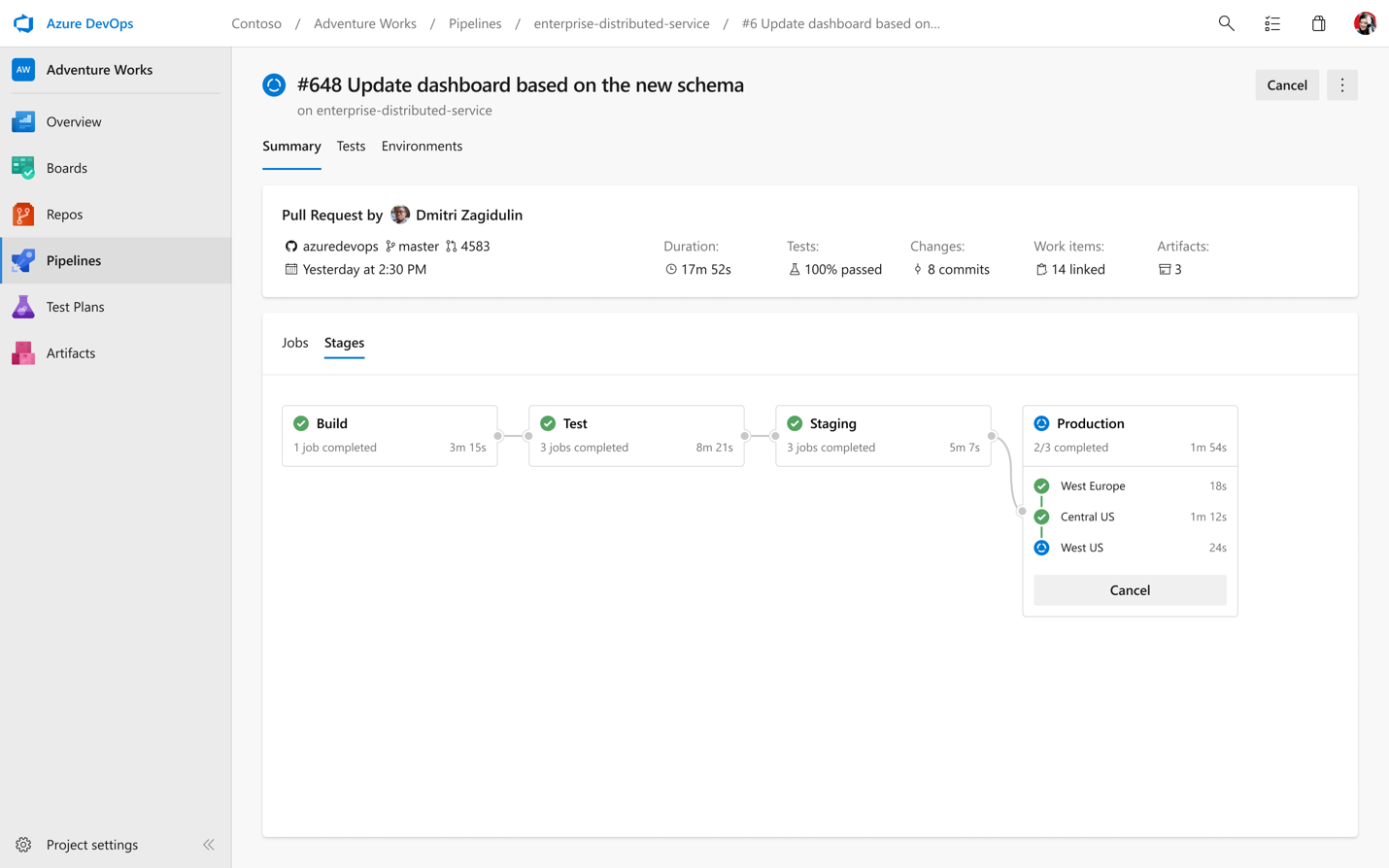

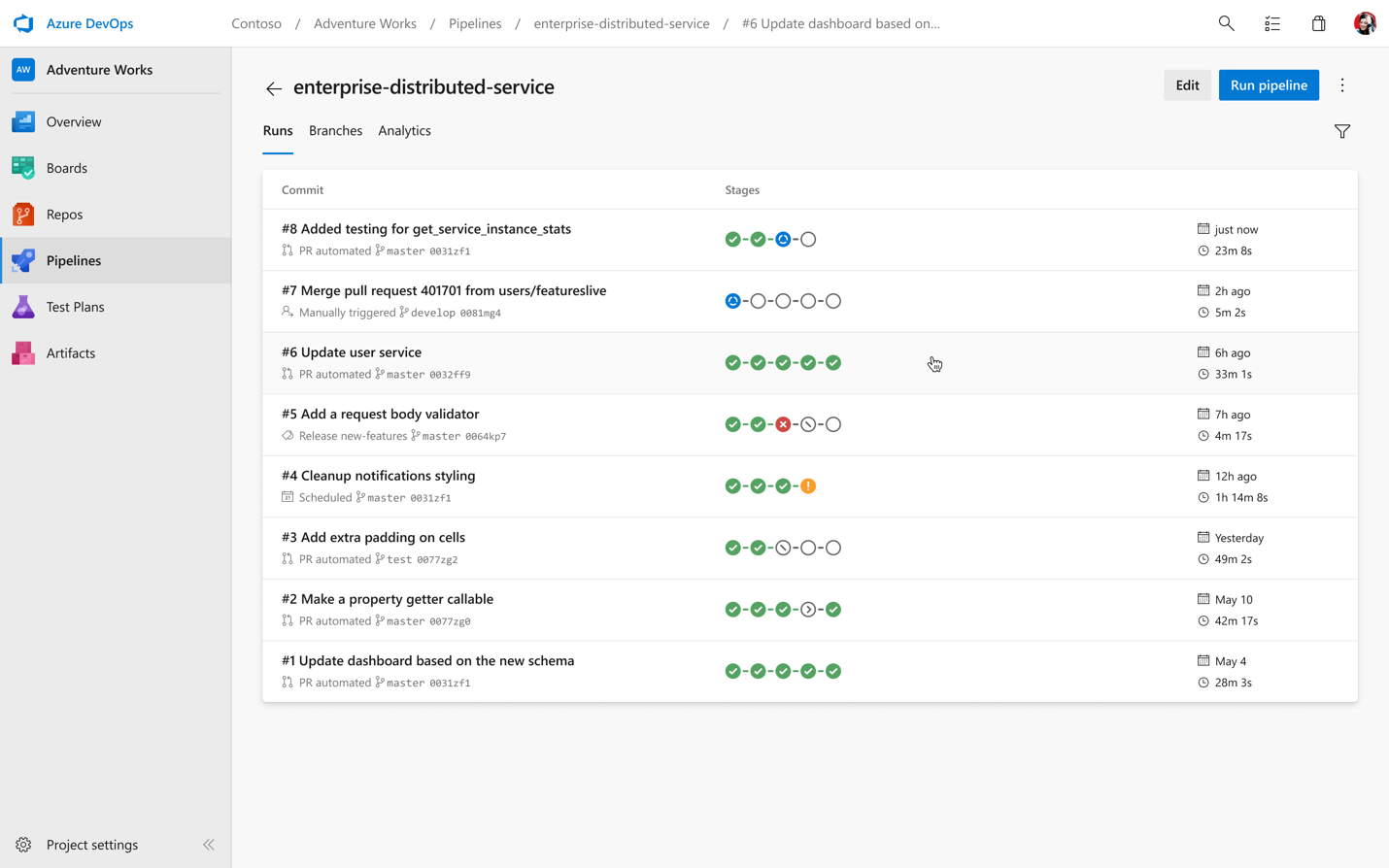

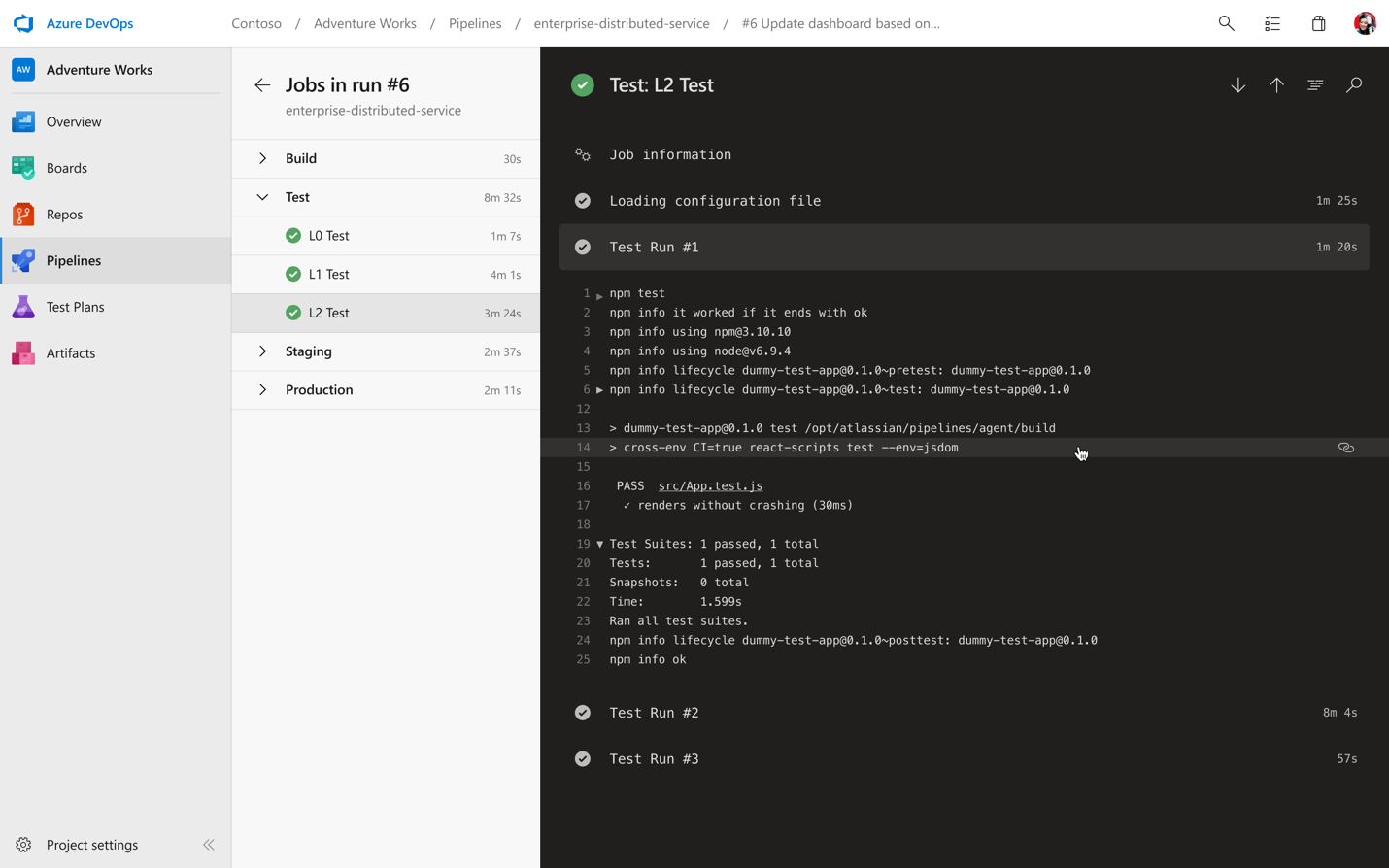

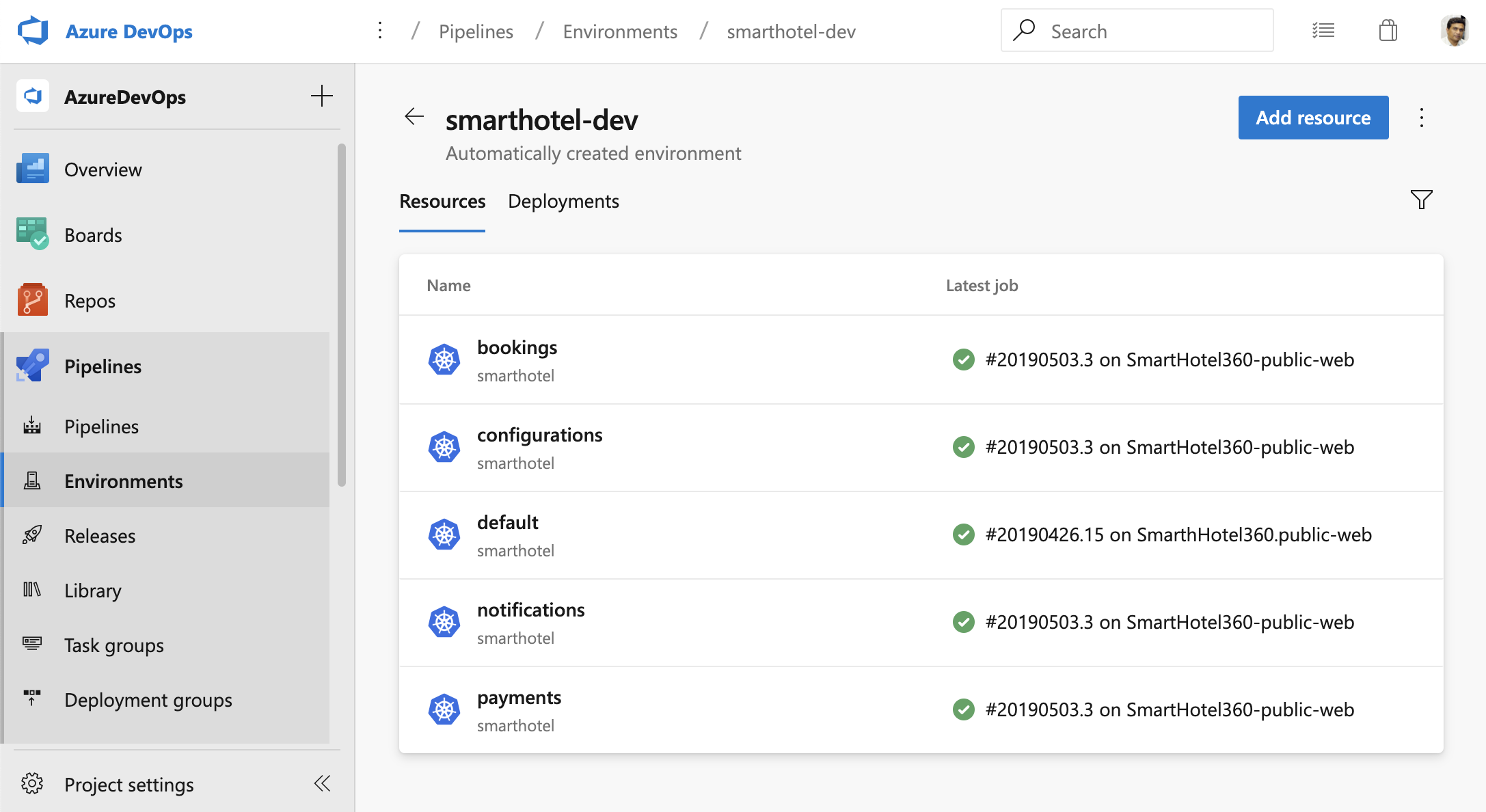

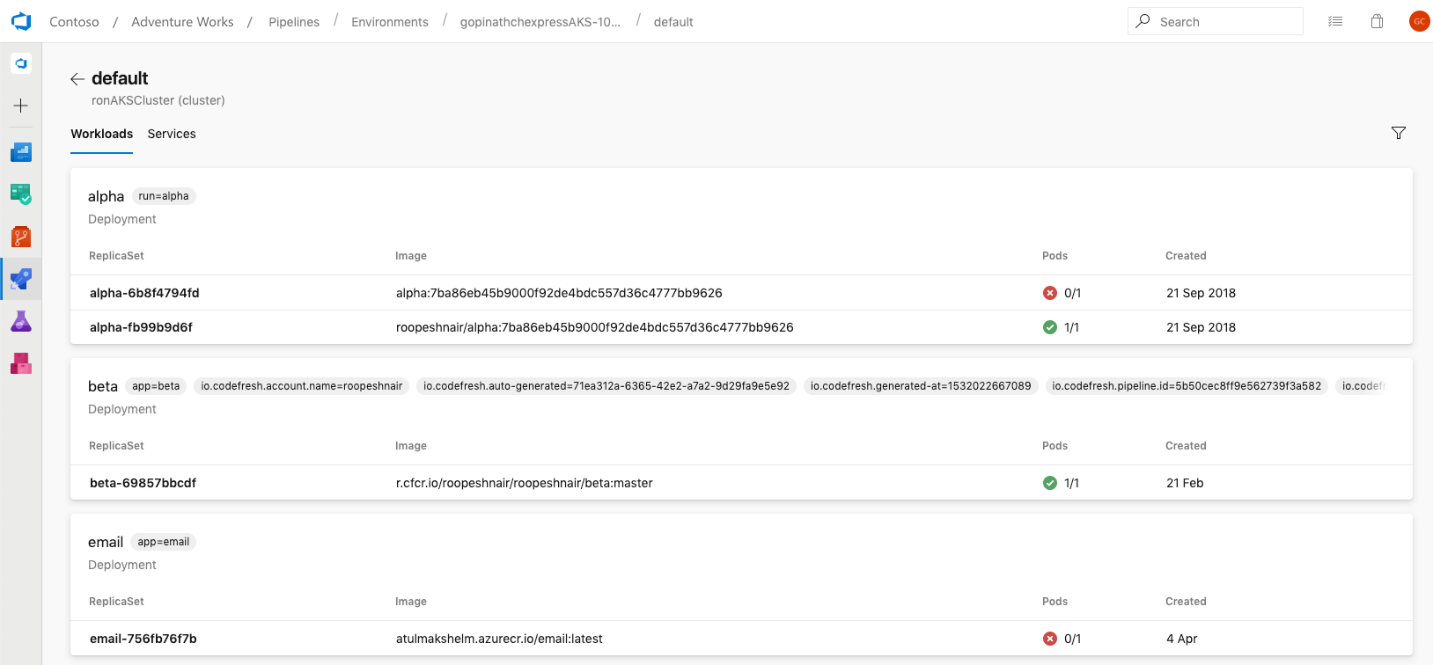

Announcing new Azure IoT Edge innovations

Today, we are announcing the public preview of Azure IoT Edge support for Kubernetes. This enables customers and partners to deploy an Azure IoT Edge workload to a Kubernetes cluster on premises. We’re seeing Azure IoT Edge workloads being used in business-critical systems at the edge. With this new integration, customers can use the feature-rich and resilient infrastructure layer that Kubernetes provides to run their Azure IoT Edge workloads, which are managed centrally and securely from Azure IoT Hub. Watch this video to learn more.

Additional IoT Edge announcements include:

- Preview of Azure IoT Edge support for Linux ARM64 (expected to be available in June 2019).

- General availability of IoT Edge extended offline support.

- General availability of IoT Edge support for Windows 10 IoT Enterprise x64.

- New provisioning capabilities using X.509 certificates and SAS tokens.

- New built-in troubleshooting tooling.
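The SAS-token option in the provisioning item above builds on the shared access signature scheme that Azure IoT Hub documents: an HMAC-SHA256 signature, computed with a base64-encoded shared key, over the URL-encoded resource URI and an expiry time. The Python sketch below follows that documented token layout (`sr`, `sig`, `se`, and the optional `skn` policy name). The function name, the example hub URI, and the key are illustrative assumptions, and in practice the Azure IoT SDKs can generate these tokens for you.

```python
import hmac
from base64 import b64decode, b64encode
from hashlib import sha256
from time import time
from typing import Optional
from urllib.parse import quote_plus, urlencode


def generate_sas_token(resource_uri: str, key_b64: str,
                       policy_name: Optional[str] = None,
                       expiry: Optional[int] = None,
                       ttl_seconds: int = 3600) -> str:
    """Build a shared access signature token for an IoT Hub resource URI.

    resource_uri -- e.g. "<hub>.azure-devices.net/devices/<deviceId>" (illustrative)
    key_b64      -- the base64-encoded shared access key
    policy_name  -- only for hub-level shared access policies; omit for device keys
    expiry       -- absolute Unix time; defaults to now + ttl_seconds
    """
    if expiry is None:
        expiry = int(time()) + ttl_seconds
    # String to sign: URL-encoded resource URI, a newline, then the expiry.
    string_to_sign = f"{quote_plus(resource_uri)}\n{expiry}"
    signature = b64encode(
        hmac.new(b64decode(key_b64), string_to_sign.encode("utf-8"), sha256).digest()
    ).decode("utf-8")
    fields = {"sr": resource_uri, "sig": signature, "se": str(expiry)}
    if policy_name is not None:
        fields["skn"] = policy_name
    # urlencode percent-encodes each value ('/' in sr, '+' and '=' in sig).
    return "SharedAccessSignature " + urlencode(fields)
```

Passing a fixed `expiry` makes the output deterministic, which is convenient for testing; by default the token expires `ttl_seconds` (one hour) after it is created.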

A common use case for IoT Edge is transforming cameras into smart sensors that understand the physical world and enable a digital feedback loop: finding a missing product on a shelf, detecting damaged goods, and so on. These examples require demanding computer vision algorithms to deliver consistent and reliable results, large-scale streaming capabilities, and specialized hardware for faster processing to provide real-time insights to businesses. At Build, we’re partnering with Lenovo and NVIDIA to simplify the development and deployment of these applications at scale. With NVIDIA DeepStream SDK for general-purpose streaming analytics, a single IoT Edge server running Lenovo hardware can process up to 70 channels of 1080p, 30 fps H.265 video streams, offering a cost-effective solution with a faster time to market.
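A quick calculation shows the load that the 70-channel figure implies. This sketch assumes 1080p means 1920 × 1080 pixels and that all 70 streams run at a full 30 fps at the same time. It says nothing about how DeepStream actually schedules the work, which on a GPU is batched and parallel rather than one frame at a time.

```python
# Back-of-the-envelope load for 70 streams of 1920x1080 video at 30 fps.
CHANNELS = 70
FPS = 30
WIDTH, HEIGHT = 1920, 1080

frames_per_second = CHANNELS * FPS                      # total frames per second
pixels_per_second = frames_per_second * WIDTH * HEIGHT  # total pixels per second
avg_ms_per_frame = 1000 / frames_per_second             # serial-equivalent budget per frame
per_stream_ms = 1000 / FPS                              # gap between frames on one stream

print(f"{frames_per_second} frames/s")
print(f"{pixels_per_second / 1e9:.2f} gigapixels/s")
print(f"{avg_ms_per_frame:.3f} ms per frame if processed strictly one at a time")
print(f"{per_stream_ms:.1f} ms between frames on each stream")
```

The numbers work out to 2,100 frames per second, about 4.35 gigapixels per second, and roughly 0.476 ms per frame if the server handled frames serially, while each individual stream still delivers a new frame every 33.3 ms.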

This summer, NVIDIA DeepStream SDK will be available from the IoT Edge marketplace. In addition, Lenovo’s new ThinkServer SE350 and GPU-powered “tiny” edge gateways will be certified for IoT Edge.

Announcing Mobility Services through Azure Maps

Today, an increasing number of apps built on Azure are designed to take advantage of location information in some way.

Last November, we announced a new platform partnership for Azure Maps with the world’s number-one transit service provider, Moovit. What we’re achieving through this partnership is similar to what we’ve built today with TomTom. At Build this week, we’re announcing Azure Maps Mobility Services, which will be a set of APIs that leverage Moovit’s APIs for building modern mobility solutions.

Through these new services, we’re able to integrate public transit, bike shares, scooter shares, and more to deliver transit route recommendations that let customers plan routes using alternative modes of transportation, optimizing for travel time and minimizing traffic congestion. Customers will also be able to access real-time intelligence on bike and scooter docking stations and car-share-vehicle availability, including present and expected availability and real-time transit stop arrivals.
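Azure Maps Mobility Services’ routing internals are not described here, so the following is only a hypothetical illustration of the general idea of recommending the fastest combination of modes: Dijkstra’s shortest-path algorithm over a tiny multimodal network. The stop names, modes, and travel times in `NETWORK` and the `fastest_route` function are made up for this sketch and are not Moovit or Azure Maps data or APIs.

```python
import heapq
from typing import Dict, List, Tuple

# Hypothetical multimodal network: node -> list of (neighbor, minutes, mode).
Graph = Dict[str, List[Tuple[str, float, str]]]

NETWORK: Graph = {
    "home":         [("bus_stop", 4, "walk"), ("bike_dock_a", 2, "walk"), ("office", 40, "car")],
    "bus_stop":     [("transit_hub", 12, "bus")],
    "bike_dock_a":  [("transit_hub", 9, "bike share")],
    "transit_hub":  [("office", 8, "rail"), ("scooter_dock", 1, "walk")],
    "scooter_dock": [("office", 6, "scooter share")],
    "office":       [],
}


def fastest_route(graph: Graph, start: str, goal: str) -> Tuple[float, List[Tuple[str, str]]]:
    """Dijkstra's algorithm: minimize total minutes across any mix of modes.

    Returns (total_minutes, legs), where each leg is (mode, arrival_node).
    """
    best = {start: 0.0}                        # shortest known time to each node
    previous: Dict[str, Tuple[str, str]] = {}  # node -> (predecessor, mode used)
    queue = [(0.0, start)]
    while queue:
        elapsed, node = heapq.heappop(queue)
        if node == goal:
            break  # first time the goal is popped, its time is final
        if elapsed > best.get(node, float("inf")):
            continue  # stale queue entry
        for neighbor, minutes, mode in graph[node]:
            candidate = elapsed + minutes
            if candidate < best.get(neighbor, float("inf")):
                best[neighbor] = candidate
                previous[neighbor] = (node, mode)
                heapq.heappush(queue, (candidate, neighbor))
    if goal not in best:
        raise ValueError(f"no route from {start} to {goal}")
    # Walk predecessors back from the goal to rebuild the legs in order.
    legs = []
    node = goal
    while node != start:
        prev, mode = previous[node]
        legs.append((mode, node))
        node = prev
    return best[goal], list(reversed(legs))


if __name__ == "__main__":
    total, legs = fastest_route(NETWORK, "home", "office")
    print(total, legs)
```

With these made-up times, the best path walks to a bike-share dock, rides to the transit hub, and finishes by scooter share in 18 minutes, beating the 19-minute rail option and the 40-minute drive. A real service could fold live signals, such as the docking-station availability and transit arrivals mentioned above, into the edge weights.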

Customers can use Azure Maps for IoT applications—or any application that uses geospatial or location data, such as apps for field service, logistics, manufacturing, and smart cities. Retail apps may integrate mobility intelligence to help customers access their stores or plan future store locations that optimize for transit accessibility. Field services apps may guide employees from one customer to another based on real-time service demand. City planners may use mobility intelligence to analyze the movement of occupants to plan their own mobility services, visualize new developments, and prioritize locations in the interests of occupants.

You can stay up to date about how Azure Maps is paving the way for the next generation of location services on the Azure Maps blog, and if you’re at Build this week, be sure to visit the Azure Maps booth to see our mobility and spatial operations services in action.

Simplifying development of robotic systems with Windows 10 IoT

Microsoft and Open Robotics have worked together to make the Robot Operating System (ROS) generally available for Windows 10 IoT. Additionally, we’re making it even easier to build ROS solutions in Visual Studio Code by adding support for Windows, debugging, and visualization to a community-supported Visual Studio Code extension. Read more about integration between Windows 10 IoT and ROS.

Come see us at Build

If you’re in Seattle this week, you can see some of these new technologies in our booth, and even play around with them at our IoT Hands-on Lab. I’ll also be hosting a session on our IoT Vision and Roadmap. Stop by to hear more details about these announcements and see some of these exciting new technologies in action.