One of the cardinal rules when attempting to improve software performance is to measure rather than guess. It is easy to fall into the trap of attempting a performance enhancement before root-causing the real performance bottleneck.

Our team at Microsoft has been working to improve the performance of Git to scale up to extremely large repositories, such as the Office and Windows repositories–the latter being the largest one on the planet today.

We added the Trace2 feature to Git to help us find and measure performance problems, to help us track our progress, and to help us direct our engineering efforts. We contributed Trace2 to core Git so that others may use it too.

Trace2 is a new feature available in Git 2.22, so update your version of Git if necessary and give it a try.

What is Trace2?

Trace2 is a logging framework built into Git. It can write to multiple logging targets, each with a unique format suitable for a different type of analysis. In this article I’ll use the performance format target to generate PERF format tracing data and I’ll show how we use it in our iterative development loop to understand and improve Git. In a later article I’ll focus on the event format target to generate EVENT format tracing data and show how we use it to aggregate performance across multiple commands and users and gain higher-level insight. We’ve found that both types of analysis are critical to removing the guess work and help us understand the big picture as we scale Git to help our users be productive.

Turn on Trace2 and Follow Along

In this article I’m going to do a deep dive on a few example Git commands, show the Trace2 output, and explain what it all means. It’ll be easier to understand how Trace2 works if you turn it on and follow along using one of your own repos.

I’m going to use Microsoft’s fork of Git which has features specifically for VFS for Git, so my output may differ slightly from yours.

Select a repository that you are familiar with. The bigger the better–after all we are talking about performance and scaling. It should also have an “https” remote that you can push to.

I’m going to “go really big” and use the Windows repository with help from VFS for Git.

Trace2 can write trace data to the console or to a log file. Sending it to the console can be confusing because the data is mixed with the actual command output. It is better to send it to a log file so you can study it in detail after the commands complete. Trace2 always appends new trace data to the bottom of the log file, so we can see the command history. And if multiple Git commands run concurrently, their output will be interleaved, so we can see the interaction.

Let’s turn on the performance format target and send the data to a file. And for space reasons, also turn on “brief” mode, which hides source filenames and line numbers.

You can enable Trace2 for Git commands in the current terminal window using environment variables.

export GIT_TRACE2_PERF_BRIEF=true

export GIT_TRACE2_PERF=/an/absolute/pathname/to/logfile

You’re all set now. Git commands will now append performance data to this log file.

Example 1 – Git Status

Let’s start with a simple example by running git status. We will step through the Trace2 logs line by line.

$ git status

On branch x

Your branch and 'origin/official/rsmaster' have diverged,

and have 3 and 242137 different commits each, respectively.

(use "git pull" to merge the remote branch into yours)

It took 26.02 seconds to compute the branch ahead/behind values.

You can use '--no-ahead-behind' to avoid this.

nothing to commit, working tree clean

Your log file should contain something similar to the following. The format of the output is described in PERF format, but it should be fairly easy to follow along, so I won’t try to repeat it here. I will point out key points as we go along.

Note that Git will append Trace2 messages from the current command to the end of the log file, so your log file may have output from previous commands if you have been experimenting on your own.

For space and privacy reasons, I’ll omit or shorten some of the Trace2 messages, so your output may differ from this a little. And for clarity, I will add blank lines for grouping purposes.

Process Startup

d0 | version | | | | 2.22.0.vfs.1.1

d0 | start | 0.007057 | | | 'C:vbingit.exe' status

The “start” message contains the full command line.

Column 3 contains the elapsed time since the start of the program.

d0 | child_start | 0.031535 | | | [ch0] class:hook hook:pre-command argv: ...

d0 | child_exit | 0.232245 | 0.200710 | | [ch0] pid:20112 code:0

“child_*” messages mark the boundaries of a spawned child process. This child happens to be of type “hook”.

Column 4, when present, contains the relative time since the corresponding start or enter message.

The VFS for Git-aware version of Git contains a pre-command hook that is invoked at the start of each Git command. It started at t+0.031535 seconds. The child process finished at t+0.232245 seconds. Since hooks are run synchronously, we know that the git status process waited 0.200710 seconds for the hook to complete.

d0 | cmd_name | | | | status (status)

This message prints the canonical name of the command and the command’s ancestry in the last column.

The canonical name is status. Normally, this is just the value of the first non-option token on the command line.

For top-level Git commands there are no parent Git commands, so the ancestry is reported as just status.

The ancestry will be more important later when we talk about child Git commands spawned by other Git commands. In these instances the ancestry contains the hierarchy of Git commands spawning this command. We’ll see examples of this later.

Reading the Index

d0 | region_enter | 0.232774 | | index | label:do_read_index .git/index

d0 | data | 0.518577 | 0.285803 | index | ..read/version:4

d0 | data | 0.518636 | 0.285862 | index | ..read/cache_nr:3183722

d0 | region_leave | 0.518655 | 0.285881 | index | label:do_read_index .git/index

“region_*” messages mark the boundaries of an “interesting” computation, such as a function call, a loop, or a just span of code.

Column 5, when present, contains a “category” field. This is an informal way of grouping a region or data message to a section of the code. These messages are all associated with the index.

The last column contains a label name for the region, in this case do_read_index. We know from looking at the source code that this is the name of the function that reads the index into memory. This column can also include region-specific information. In this case it is the relative pathname of the index file.

“data” messages report the values of “interesting” variables in the last column. The index was in V4 format. The index contained 3,183,722 cache-entries (files under version control).

Also, the last column of “data” and “region” messages contained within an outer region are prefixed with “..” to indicate the nesting level.

Git started reading the index at t+0.232774 and finished at t+0.518655. We spent 0.285881 seconds reading the index.

As you can see the Windows repo is extremely large with ~3.2 million files under version control. It takes Git about a third of a second just to read and parse the index. And this must happen before any actual work can start.

Applying VFS Hints

d0 | child_start | 0.518814 | | | [ch1] argv: .git/hooks/virtual-filesystem 1

d0 | child_exit | 0.534578 | 0.015764 | | [ch1] pid:28012 code:0

d0 | data | 0.964700 | 0.964700 | vfs | apply/tracked:44

d0 | data | 0.964748 | 0.964748 | vfs | apply/vfs_rows:47

d0 | data | 0.964765 | 0.964765 | vfs | apply/vfs_dirs:2

A child process was created to run the VFS for Git virtual-filesystem hook. This hook is critical to making git status fast in a virtualized repository, as VFS for Git is watching file contents change and Git can trust the hook’s response instead of walking the filesystem directly. Our Trace2 logs include some statistics about the hook’s response. For example, VFS for Git knows that only 44 files have been opened for read+write, such as in an editor.

However, we see a gap of about ~0.43 seconds after the hook finished at t+0.534578 and before the first data message at t+0.964700. In the future we might want to use Trace2 or a profiler to experiment and track down what is happening during that gap.

Status Computations

d0 | region_enter | 1.171908 | | status | label:worktrees

d0 | region_leave | 1.242157 | 0.070249 | status | label:worktrees

Phase 1 (“label:worktrees”) of the status computation took 0.070249 seconds.

d0 | region_enter | 1.242215 | | status | label:index

d0 | data | 1.243732 | 0.001517 | midx | ..load/num_packs:2592

d0 | data | 1.243757 | 0.001542 | midx | ..load/num_objects:47953162

d0 | region_enter | 1.297172 | | exp | ..label:traverse_trees

d0 | region_leave | 1.297310 | 0.000138 | exp | ..label:traverse_trees

d0 | region_leave | 1.345756 | 0.103541 | status | label:index

Phase 2 (“label:index”) of the status computation took 0.103541 seconds. Within this region, a nested region (“label:traverse_trees”) took 0.000138 seconds.

We know there are 2,592 packfiles containing ~48 million objects.

d0 | region_enter | 1.345811 | | status | label:untracked

d0 | region_leave | 1.347070 | 0.001259 | status | label:untracked

Phase 3 (“label:untracked”) of the status computation took 0.001259 seconds. This phase was very fast because it only had to inspect the 44 paths identified by the virtual-filesystem hook.

d0 | data | 1.398723 | 1.398723 | status | count/changed:0

d0 | data | 1.398766 | 1.398766 | status | count/untracked:0

d0 | data | 1.398782 | 1.398782 | status | count/ignored:0

Status was clean. We have the result ready to print at t+1.398782 seconds.

Printing Status Results

d0 | region_enter | 1.398833 | | status | label:print

d0 | region_leave | 27.418896 | 26.020063 | status | label:print

However, it took 26.020063 seconds to print the status results. More on this in a minute.

Process Shutdown

d0 | child_start | 27.419178 | | | [ch2] class:hook hook:post-command argv: ...

d0 | child_exit | 27.619002 | 0.199824 | | [ch2] pid:20576 code:0

VFS for Git also requires a post-command hook.

d0 | atexit | 27.619494 | | | code:0

Status completed with exit code 0 at t+27.619494 seconds.

The Status Ahead/Behind Problem

As you can see, we have a pretty good idea of where our time was spent in the command. We spent ~26 seconds in the “label:print” region. That seems like a very long time to print “nothing to commit, working tree clean”.

We could use a profiler tool or we could add some nested regions to track it down. I’ll leave that as an exercise for you. I did that exercise a few years ago and found that the time was spent computing the exact ahead/behind numbers. Status reported that my branch is behind upstream by 242,137 commits and the only way to know that is to walk the commit graph and search for the relationship between HEAD and the upstream branch.

At this point you’re probably saying something about this being a contrived example and that I picked an ancient commit as the basis for my x topic branch. Why else would I have a branch nearly 250K commits behind? The Windows repo is huge and master moves very fast. The basis for my topic branch is less than 3 months old! This is easily within the realm of a topic branch under development and review. This is another scale vector we have to contend with.

This led me to add the --no-ahead-behind option to git status in Git 2.17. Let’s give that a try and see what happens.

$ git status --no-ahead-behind

On branch x

Your branch and 'origin/official/rsmaster' refer to different commits.

(use "git status --ahead-behind" for details)

nothing to commit, working tree clean

When this option is enabled, status only reports that the 2 branches refer to different commits. It does not report ahead/behind counts or whether the branches have diverged or are fast-forwardable.

I’ll skip over the similar parts of the trace output and just show the bottom portion.

d0 | data | 1.474304 | 1.474304 | status | count/changed:0

d0 | data | 1.474348 | 1.474348 | status | count/untracked:0

d0 | data | 1.474376 | 1.474376 | status | count/ignored:0

d0 | region_enter | 1.474390 | | status | label:print

d0 | region_leave | 1.475869 | 0.001479 | status | label:print

d0 | atexit | 1.663404 | | | code:0

Git printed the status results in 0.001479 seconds in this run by avoiding the ahead/behind computation. Total run time for status was 1.663404 seconds.

So What’s the Big Deal?

At this point you may be saying “So, what’s the big deal? I could get that level of detail from a profiler.”

Profilers are great, but have their limitations.

-

Profilers typically only work on a single process. Git processes frequently invoke child Git processes and shell scripts. This makes it extremely difficult to capture a complete view of an operation since we need to somehow merge the profile data from all of the processes spawned by a command.

-

A top-level Git command may spawn numerous child Git commands and shell scripts. These shell scripts may also spawn numerous child Git commands. These child processes usually require a complex setup with environment variables and stdin/stdout plumbing. This makes it difficult to capture profile data on an isolated child Git process.

-

Profiler dumps are typically very large, since they contain stack trace and symbol data. They are platform-specific and very version/build sensitive, so they don’t archive well. They are great for exploring performance with an interactive tool, like Visual Studio, but they are less so when comparing multiple runs or worse, runs from other users. It is often better to have simple logs of the commands and compare them like we did above.

-

Dumps are usually based on process sampling and typically have function-level granularity. Trace2 messages can be generated at precise points in the code with whatever granularity is desired. This allows us to focus our attention on “interesting” parts of the code.

-

It is difficult to extract per-thread details. Trace2 messages identify the calling thread and have thread-specific regions and timestamps. This helps us understand and fine-tune our multi-threading efforts.

-

Profilers give us the time spent in a section of code, but they don’t let us gather run-time data as part of the output, such as the number of packfiles, the size of the index, or the number of changed files. Trace2 data messages let us include this information with the timing information. This helps us see when there are data-dependent performance problems, such as in the ahead/behind example.

-

Profilers don’t allow us to do higher-level data aggregations, such as averaging times by region across multiple runs or across multiple users. Trace2 data can be post-processed for aggregation and further study. More on this in the next article.

-

Trace2 is cross-platform so we can do the same analysis on all platforms and we can compare numbers between platforms.

As you can see, Trace2 gives us the ability to collect exactly the performance data that we need, archive it efficiently, and analyze it in a variety of ways. Profilers can help us in our work, but they have their limitations.

Example 2 – Git Push

Let’s look at a more complex example by running git push.

In a previous article and in a Git Merge 2019 presentation we described how we improved the performance of push with a new sparse push algorithm. In both we hinted that we used Trace2 to find and measure the problem and to confirm that we had actually improved it.

Let’s dive in and see how Trace2 made this possible and let you verify our results.

Create a Commit

First, let’s make a change that we can push. Create and checkout a test branch. Modify a single file (preferably someplace deep in the worktree). And commit it.

This would be a good time to look at the log file and see the trace output written by those commands, but I’ll leave that for you.

You may want to delete the log file before starting the push, so that later it will only contain the push logging. This may make it easier to follow along.

Push with the Original Algorithm

Next, let’s push your test branch with the original algorithm.

git -c pack.useSparse=false push origin test

Push is a complex command and creates a cascade of at least 6 processes. Trace2 interleaves the output from each of the Git commands as it happens.

The first column in each line is the Git process depth.

Push Process Startup

d0 | version | | | | 2.22.0.vfs.1.1

d0 | start | 0.012840 | | | 'C:vbingit.exe' -c pack.useSparse=false push

d0 | child_start | 0.041627 | | | [ch0] class:hook hook:pre-command argv: ...

d0 | child_exit | 0.326167 | 0.284540 | | [ch0] pid:20752 code:0

d0 | cmd_name | | | | push (push)

The top-level “d0:git push” starts up. It reports its canonical name is push and since it is a top-level command, its ancestry is just push.

Push Spawns Remote-Https which Spawns Git-Remote-Https

d0 | child_start | 0.326698 | | | [ch1] class:remote-https argv: git remote-https ...

“d0:git push” spawns “d1:git remote-https”.

d1 | version | | | | 2.22.0.vfs.1.1

d1 | start | 0.007434 | | | git remote-https origin https://my.server/os

d1 | cmd_name | | | | _run_dashed_ (push/_run_dashed_)

“d1:git remote-https” starts. It reports that its canonical name is _run_dashed_ and its ancestry is push/_run_dashed_.

The term _run_dashed_ is used to indicate that the command is going to hand off to a dashed form of the command. In this case, git remote-https is invoking git-remote-https rather than actually doing the work itself.

d1 | child_start | 0.031055 | | | [ch0] argv: git-remote-https ...

“d1:git remote-https” spawns “d2:git-remote-https”.

d2 | version | | | | 2.22.0.vfs.1.1

d2 | start | 0.013885 | | | git-remote-https origin https://my.server/os

d2 | cmd_name | | | | remote-curl (push/_run_dashed_/remote-curl)

“d2:git-remote-https” reports its canonical name is remote-curl. The ancestry is a hierarchy of the open Git commands (including this one).

Push is Working while Waiting

d0 | data | 0.859083 | 0.859083 | midx | load/num_packs:2894

d0 | data | 0.859112 | 0.859112 | midx | load/num_objects:47641314

The first column indicates that these data messages are from “d0”. This means that the top-level “d0:git push” is doing something while it waits for “d1:git remote-https” to complete. This can happen if Git spawns a background child process and doesn’t immediately wait for it to complete. We’re going to ignore it.

Getting My Credentials

d2 | child_start | 0.639533 | | | [ch0] argv: 'git credential-manager get'

“d2:git-remote-https” spawns “d3:git credential-manager” to get my cached credentials.

d3 | version | | | | 2.22.0.vfs.1.1

d3 | start | 0.007614 | | | 'C:...git.exe' credential-manager get

d3 | cmd_name | | | | _run_dashed_

(push/_run_dashed_/remote-curl/_run_dashed_)

“d3:git credential-manager” also reports _run_dashed_ because it also going to defer to its dashed peer.

d3 | child_start | 0.039516 | | | [ch0] argv: git-credential-manager get

“d3:git credential-manager” spawns “git-credential-manager”, but this is a third-party application so we do not get any Trace2 data from it.

d3 | child_exit | 0.495332 | 0.455816 | | [ch0] pid:24748 code:0

d3 | atexit | 0.495867 | | | code:0

The “d3:git credential-manager” process reported its “atexit” time as 0.495867 seconds. That is the “internal” duration of the command (within main()).

d2 | child_exit | 1.436891 | 0.797358 | | [ch0] pid:10412 code:0

The “d2:git-remote-https” process reported the “child_exit” time as 0.797358 seconds. That is the “external” duration of the child and includes the process creation and cleanup overhead, so it is a little longer than the child’s “atexit” time.

“d2:git-remote-https” now has the credentials.

Storing My Credentials

d2 | child_start | 1.737848 | | | [ch1] argv: 'git credential-manager store'

d3 | version | | | | 2.22.0.vfs.1.1

d3 | start | 0.007661 | | | 'C:...git.exe' credential-manager store

d3 | cmd_name | | | | _run_dashed_

(push/_run_dashed_/remote-curl/_run_dashed_)

d3 | child_start | 0.038594 | | | [ch0] argv: git-credential-manager store

d3 | child_exit | 0.270066 | 0.231472 | | [ch0] pid:21440 code:0

d3 | atexit | 0.270569 | | | code:0

d2 | child_exit | 2.308430 | 0.570582 | | [ch1] pid:25732 code:0

“d2:git-remote-https” repeats the credential-manager sequence again to store/update the credentials.

Running Send-Pack and Pack-Objects

d2 | child_start | 2.315457 | | | [ch2] argv: git send-pack ...

“d2:git-remote-https” spawns “d3:git send-pack”.

d3 | version | | | | 2.22.0.vfs.1.1

d3 | start | 0.007556 | | | git send-pack --stateless-rpc ...

d3 | cmd_name | | | | send-pack

(push/_run_dashed_/remote-curl/send-pack)

d3 | child_start | 0.050237 | | | [ch0] argv: git pack-objects ...

“d3:git send-pack” spawns “d4:git pack-objects”.

d4 | version | | | | 2.22.0.vfs.1.1

d4 | start | 0.007636 | | | git pack-objects --all-progress-implied ...

d4 | cmd_name | | | | pack-objects

(push/_run_dashed_/remote-curl/send-pack/pack-objects)

“d4:git pack-objects” reports its canonical name is pack-objects and its ancestry is push/_run_dashed_/remote-curl/send-pack/pack-objects.

d4 | region_enter | 0.039389 | | pack-objects | label:enumerate-objects

d4 | region_leave | 14.420960 | 14.381571 | pack-objects | label:enumerate-objects

“d4:git pack-objects” spent 14.381571 seconds enumerating objects. More on this in a minute.

d4 | region_enter | 14.421012 | | pack-objects | label:prepare-pack

d4 | region_leave | 14.431710 | 0.010698 | pack-objects | label:prepare-pack

d4 | region_enter | 14.431754 | | pack-objects | label:write-pack-file

d4 | data | 14.433644 | 0.001890 | pack-objects | ..write_pack_file/wrote:9

d4 | region_leave | 14.433679 | 0.001925 | pack-objects | label:write-pack-file

d4 | atexit | 14.434176 | | | code:0

“d4:git pack-objects” wrote 9 objects in a packfile to stdout.

d3 | child_exit | 14.924402 | 14.874165 | | [ch0] pid:24256 code:0

d3 | atexit | 15.610328 | | | code:0

Hidden in here somewhere, “d3:git send-pack” sent the packfile to the server. I’m not going to try to isolate the actual network time.

Unwinding Everything

d2 | child_exit | 18.167133 | 15.851676 | | [ch2] pid:19960 code:0

d2 | atexit | 18.176882 | | | code:0

d1 | child_exit | 18.419940 | 18.388885 | | [ch0] pid:13484 code:0

d1 | atexit | 18.420427 | | | code:0

d0 | child_exit | 18.988088 | 18.661390 | | [ch1] pid:16356 code:0

The child processes all exit and control returns to the top-level “d0:git push”.

d0 | child_start | 18.988210 | | | [ch2] class:hook hook:post-command argv: ...

d0 | child_exit | 19.186139 | 0.197929 | | [ch2] pid:2252 code:0

“d0:git push” runs the VFS for Git post-command hook.

d0 | atexit | 19.186581 | | | code:0

And we’re done. The total push time was 19.186581 seconds. Clearly, enumerate-objects is the problem, since it consumes 14.4 of the 19.2 seconds.

Push With the New Algorithm

Now let’s try again with the new algorithm. Make another change to that same file, commit and push.

git -c pack.useSparse=true push origin test

For space reasons I’m only going to show the important differences for this push.

d4 | version | | | | 2.22.0.vfs.1.1

d4 | start | 0.007520 | | | git pack-objects --all-progress-implied ...

d4 | cmd_name | | | | pack-objects

(push/_run_dashed_/remote-curl/send-pack/pack-objects)

d4 | region_enter | 0.039500 | | pack-objects | label:enumerate-objects

d4 | region_leave | 0.590796 | 0.551296 | pack-objects | label:enumerate-objects

With the new algorithm enumerate-objects took 0.551296 seconds.

d4 | region_enter | 0.590900 | | pack-objects | label:prepare-pack

d4 | region_leave | 0.601070 | 0.010170 | pack-objects | label:prepare-pack

d4 | region_enter | 0.601118 | | pack-objects | label:write-pack-file

d4 | data | 0.602861 | 0.001743 | pack-objects | ..write_pack_file/wrote:9

d4 | region_leave | 0.602896 | 0.001778 | pack-objects | label:write-pack-file

d4 | atexit | 0.603413 | | | code:0

Like before “d4:git pack-objects” wrote 9 objects in a packfile to stdout.

d0 | atexit | 4.933607 | | | code:0

And the entire push only took 4.933607 seconds. That’s much better!

Using Trace2 for Iterative Development

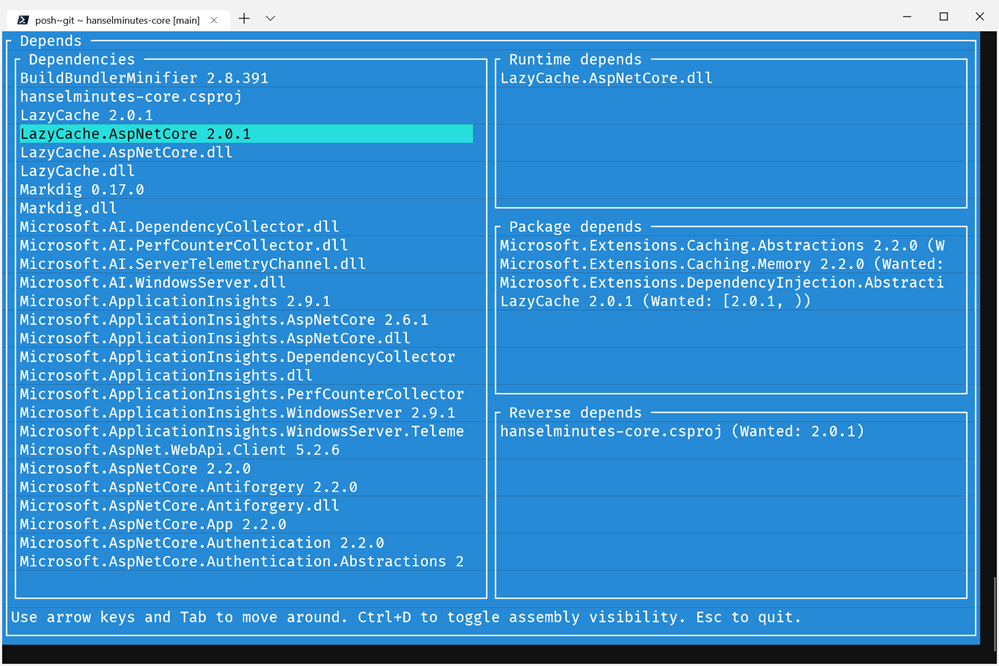

![]()

Trace2 defines the basic performance tracing framework. And allows us to see where time is being spent and gives us a feeling of the overall time flow in a command. It does this by tracking process times, child process relationships, thread usage, and regions of interest.

Trace2 lets us explore and experiment during our iterative development loop. For example, we can trivially add new regions and data messages to help further our understanding of Git’s internal workings. And we can use Trace2 in conjunction with traditional profilers to help focus our investigations.

The git push example shows how we were able to track down and measure the performance problem using just the process and child process messages. We initially guessed it would be a network or a packfile compression problem. We weren’t even looking at enumerate-objects. But after running some experiments and measuring the activity in each process, we found a complex set of parent/child relationships and that the problem is actually in “d4:git pack-objects” — four nested processes removed from the “d0:git push” command we launched.

With that information in hand, we were then able to dig deeper and use custom Trace2 regions and the profiler on pack-objects to help us understand what was happening and why. We then deployed an experimental build including the custom Trace2 regions to some of our Windows developers to confirm they were experiencing the same bottleneck that we found.

Consequently, we were able to design a more efficient algorithm and then use Trace2 to confirm that we actually fixed the problem. We then deployed another experimental build to some of our Windows developers to confirm that it fixed their problem too.

Another example where we used Trace2 to measure performance is the commit-graph feature. In a previous post we described generation numbers as a tool to speed up commit walks. After the algorithms were implemented in Git, some repositories had data shapes that actually led to worse performance! After digging into these examples, multiple alternatives were presented to replace generation numbers. While we could use timing data to compare runs, that data is very noisy. Instead, we used Trace2 to report the exact number of commits walked by each algorithm for each option. These numbers were predictable and related directly to the algorithm’s runtime.

These are but a few examples of how we have used Trace2 and the “performance format target” in our iterative development process to address performance problems as we scale Git.

Final Remarks

The Trace2 performance target is a great tool for the types of analysis that I’ve described in this article. But it does have a limitation. We have to already know what needs to be studied (e.g. “Why is push slow?”).

To best help our enterprise Windows and Office developers we need to understand their pain-points: the commands that they find slow; the conditions underwhich those commands are especially slow; and what overall effect this is having on their total productivity. Then with those measurements in hand, we can prioritize our engineering efforts to improve Git for maximum benefit. For that we need to collect and aggregate some telemetry data on Git usage.

In my next article I’ll talk about using the Trace2 “event format target” to generate a custom telemetry stream for Git. I’ll talk about the usual telemetry metrics, like average and weighted-average command durations. And I’ll talk about custom metrics, like averages for the regions and data fields we identified earlier. This will give us the data to prioritize our engineering efforts and to verify at-scale the optimizations we make.

The post A Deep Dive into Git Performance using Trace2 appeared first on Azure DevOps Blog.