In the coming weeks, we will update the Bing Webmaster Guidelines to make them clearer and more transparent to the SEO community. This major update will be accompanied by blog posts that share more details and context around some specific violations.

In the first article of this series, we are introducing a new penalty to address “inorganic site structure” violations. This penalty will apply to malicious attempts to obfuscate website boundaries, which covers some old attack vectors (such as doorways) and new ones (such as subdomain leasing).

What is a website anyway?

One of the most fascinating aspects of building a search engine is developing the infrastructure that gives us a deep understanding of the structure of the web. We’re talking trillions and trillions of URLs, connected with one another by hyperlinks.

The task is herculean, but fortunately we can use some logical grouping of these URLs to make the problem more manageable – and understandable by us, mere humans! The most important of these groupings is the concept of a “website”.

We all have some intuition of what a website is. For reference, Wikipedia defines a website as “a collection of related network web resources, such as web pages, multimedia content, which are typically identified with a common domain name.”

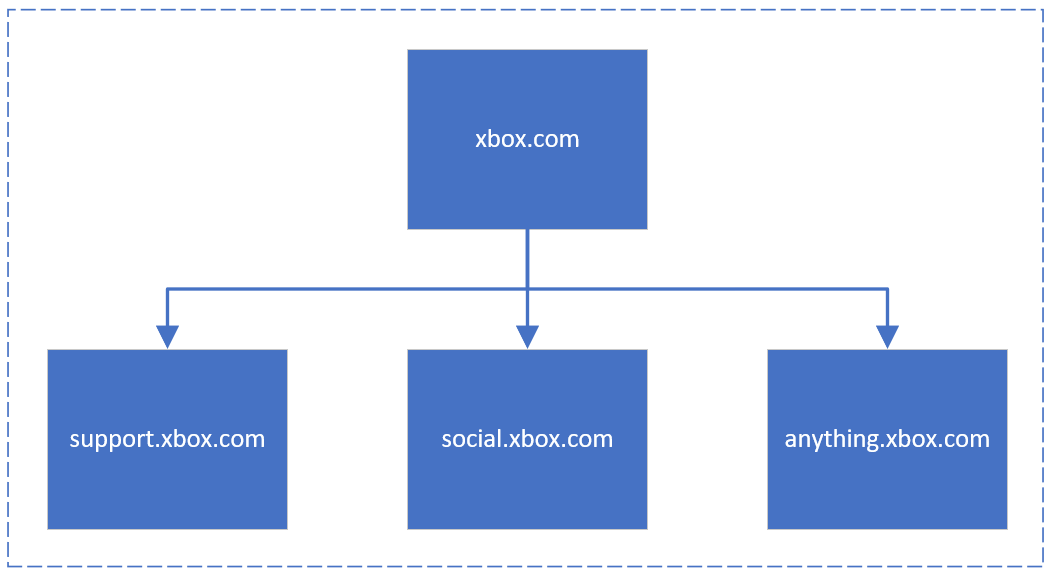

It is indeed very typical that the boundary of a website is the domain name. For example, everything that lives under the xbox.com domain name is a single website.

Fig. 1 – Everything under the same domain name is part of the same website.

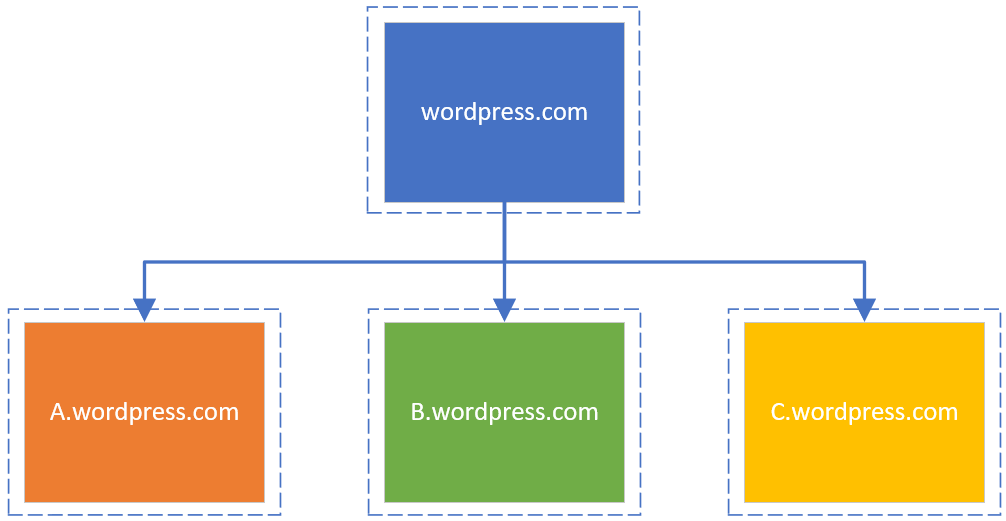

A common alternative is the case of a hosting service where each subdomain is its own website, such as wordpress.com or blogspot.com. And there are some (less common) cases where each subdirectory is its own website, similar to what GeoCities was offering in the late 90s.

Fig. 2 – Each subdomain is its own separate website.
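To make the three boundary conventions above concrete, here is a minimal Python sketch that maps a URL to a "site key" under each of them. It is an illustration only, not how Bing determines website boundaries: the policy names `domain`, `subdomain` and `subdirectory`, the helper names, and the tiny `MULTI_LABEL_SUFFIXES` set (a hypothetical stand-in for the Public Suffix List) are all assumptions.

```python
from urllib.parse import urlsplit

# Hypothetical stand-in for the Public Suffix List: a few multi-label suffixes only.
MULTI_LABEL_SUFFIXES = {"co.uk", "com.au"}


def registrable_domain(host: str) -> str:
    """Return the registrable domain of a host, e.g. 'xbox.com' or 'example.co.uk'."""
    labels = host.lower().rstrip(".").split(".")
    if len(labels) >= 3 and ".".join(labels[-2:]) in MULTI_LABEL_SUFFIXES:
        return ".".join(labels[-3:])
    return ".".join(labels[-2:])


def site_key(url: str, policy: str) -> str:
    """Map a URL to the website it belongs to under a given boundary policy (assumed names)."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if policy == "domain":        # everything under the domain is one site (Fig. 1)
        return registrable_domain(host)
    if policy == "subdomain":     # each subdomain is its own site (Fig. 2)
        return host
    if policy == "subdirectory":  # each first path segment is its own site (GeoCities style)
        first = parts.path.strip("/").split("/", 1)[0]
        return f"{host}/{first}" if first else host
    raise ValueError(f"unknown policy: {policy}")
```

For example, `site_key("https://www.xbox.com/en-US/games", "domain")` yields `xbox.com`, while `site_key("https://alice.wordpress.com/2020/post", "subdomain")` yields `alice.wordpress.com`. A real system would consult the full Public Suffix List rather than a hardcoded set.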

Why does it matter?

Some fundamental algorithms used by search engines differentiate between URLs that belong to the same website and URLs that don’t. For example, it is well known that most algorithms based on the link graph propagate link value differently depending on whether a link is internal (same site) or external (cross-site).
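The Python sketch below shows why the boundary matters for links: the same link flips from internal to external depending on where the site boundary is drawn. The helpers `site_of` and `classify_links` and the `per_subdomain` flag are hypothetical, and the registrable domain is naively taken to be the last two host labels, which is wrong for suffixes such as .co.uk.

```python
from urllib.parse import urlsplit


def site_of(url: str, per_subdomain: bool) -> str:
    """Hypothetical site identifier: the full host, or a naive 'last two labels' domain."""
    host = (urlsplit(url).hostname or "").lower()
    return host if per_subdomain else ".".join(host.split(".")[-2:])


def classify_links(source: str, targets: list[str], per_subdomain: bool) -> dict[str, list[str]]:
    """Split the outgoing links of `source` into internal (same site) and external (cross-site)."""
    src_site = site_of(source, per_subdomain)
    result: dict[str, list[str]] = {"internal": [], "external": []}
    for target in targets:
        kind = "internal" if site_of(target, per_subdomain) == src_site else "external"
        result[kind].append(target)
    return result


links = ["https://shop.example.com/", "https://blog.example.com/about", "https://other.org/"]
print(classify_links("https://blog.example.com/post", links, per_subdomain=False))
print(classify_links("https://blog.example.com/post", links, per_subdomain=True))
```

With `per_subdomain=False`, the link from blog.example.com to shop.example.com counts as internal; with `per_subdomain=True`, the same link counts as external. That is the distinction boundary misrepresentation tries to exploit.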

These algorithms also use site-level signals (among many others) to infer the relevance and quality of content. That’s why pages on a very trustworthy, high-quality website tend to rank more reliably and higher than others, even if such pages are new and haven’t accumulated many page-level signals.

When things go wrong

Stating the obvious, we can’t have people manually review billions of domains to decide what constitutes a website. To solve this problem, like many of the other problems we need to solve at the scale of the web, we developed sophisticated algorithms to determine website boundaries.

The algorithm gets it right most of the time. Occasionally it gets it wrong, either conflating two websites into one or viewing a single website as two different ones. And sometimes there’s no obvious answer, even for humans! For example, if your business operates in both the US and the UK, with content hosted on two separate domains (respectively a .com domain and a .co.uk domain), you can be seen as running either one or two websites depending on how independent your US and UK entities are, how much content is shared across the two domains, how much they link to each other, etc.

However, when we reviewed sample cases where the algorithm got it wrong, we noticed that the most common root cause was that the website owner actively tried to misrepresent the website boundary.

It can indeed be very tempting to try to fool the algorithm. If your internal links are viewed as external, you can get a nice rank boost. And if you can propagate some of the site-level signals to pages that don’t technically belong to your website, these pages can get an unfair advantage.

Making things right

In order to maintain the quality of our search results while being transparent to the SEO community, we are introducing new penalties to address “inorganic site structure”. In short, creating a website structure that actively misrepresents your website boundaries is going to be considered a violation of the Bing Webmaster Guidelines and will potentially result in a penalty.

Some “inorganic site structure” violations were already covered by other categories, whereas others were not. To better understand what constitutes active misrepresentation, let’s look at three examples.

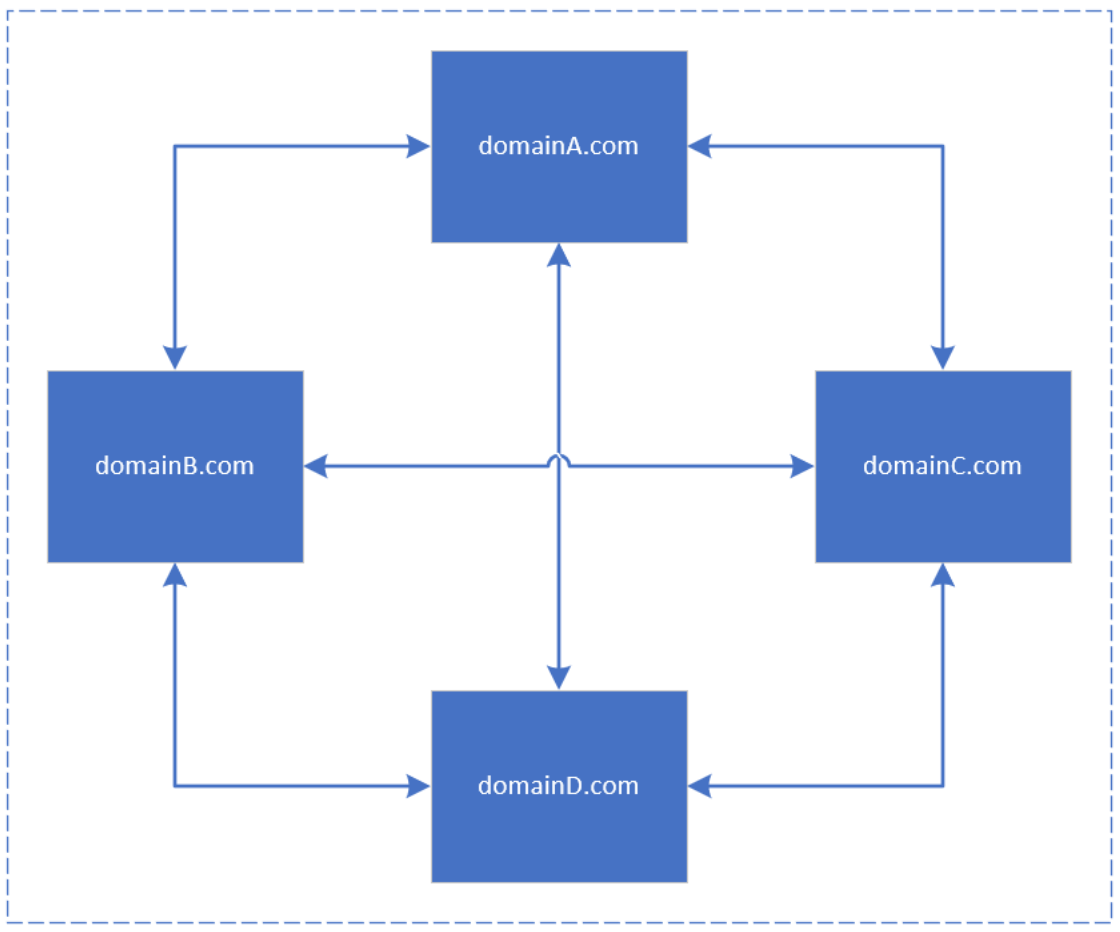

PBNs and other link networks

While not all link networks misrepresent website boundaries, there are many cases where a single website is artificially split across many different domains, all cross-linking to one another, for the obvious purpose of rank boosting. This is particularly true of PBNs (private blog networks).

Fig. 3 – All these domains are effectively the same website.

This kind of behavior is already in violation of our link policy. Going forward, it will also be in violation of our “inorganic site structure” policy and may receive additional penalties.

Doorways and duplicate content

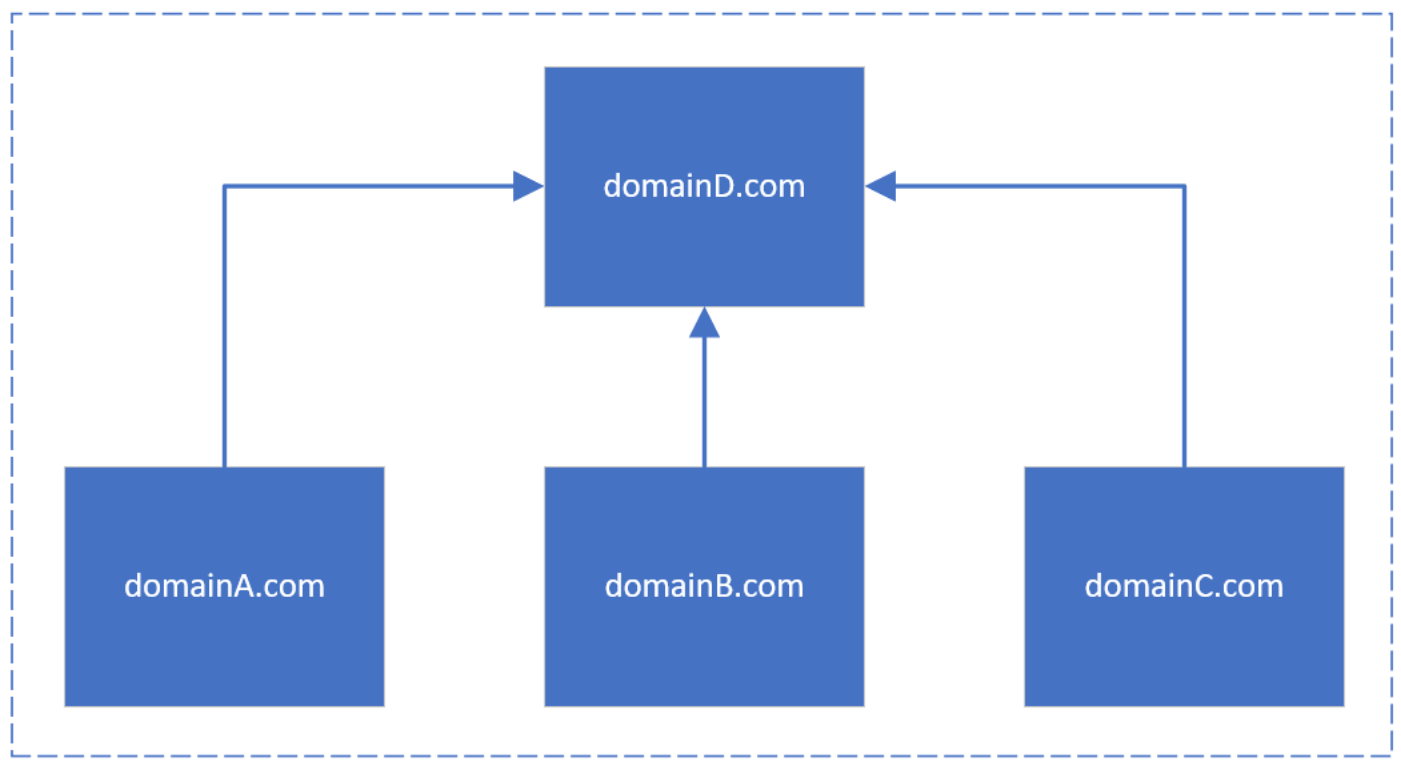

Doorways are pages that are overly optimized for specific search queries, but which only redirect or point users to a different destination. The typical situation is someone spinning up many different sites hosted under different domain names, each targeting its own set of search queries but all redirecting to the same destination or hosting the same content.

Fig. 4 – All these domains are effectively the same website (again).

Again, this kind of behavior is already in violation of our webmaster guidelines. In addition, it is also a clear-cut example of “inorganic site structure”, since we have ultimately only one real website, but the webmaster tried to make it look like several independent websites, each specialized in its own niche.

Note that we will be looking for malicious intent before flagging sites in violation of our “inorganic site structure” policy. We acknowledge that duplicate content is unavoidable (e.g. HTTP vs. HTTPS); however, there are simple ways to declare one website or destination as the source of truth, whether by redirecting duplicate pages with HTTP 301 or by adding canonical tags pointing to the destination. Violators, on the other hand, will generally implement neither of these, or will instead use sneaky redirects.
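As a minimal sketch of the two options mentioned above, the Python below either answers a duplicate URL with an HTTP 301 redirect or produces a canonical tag pointing to the destination. It assumes, hypothetically, that `https://www.example.com` is the source of truth; the constants and function names are illustrative, and a real site would usually configure redirects in its web server or framework rather than in code like this.

```python
import html
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Hypothetical source of truth: HTTPS on www.example.com.
CANONICAL_SCHEME = "https"
CANONICAL_HOST = "www.example.com"


def canonical_url(url: str) -> str:
    """Rewrite any duplicate variant of a URL onto the canonical scheme and host."""
    parts = urlsplit(url)
    return urlunsplit((CANONICAL_SCHEME, CANONICAL_HOST, parts.path or "/", parts.query, ""))


def redirect_response(url: str) -> Optional[tuple[int, dict[str, str]]]:
    """Option 1: answer a duplicate URL with a permanent (301) redirect to the destination."""
    target = canonical_url(url)
    if url == target:
        return None  # already canonical: serve the page normally
    return 301, {"Location": target}


def canonical_link_tag(url: str) -> str:
    """Option 2: keep serving the duplicate page, but declare the destination in its <head>."""
    href = html.escape(canonical_url(url), quote=True)
    return f'<link rel="canonical" href="{href}">'
```

A request for `http://example.com/page?id=1` gets a 301 to `https://www.example.com/page?id=1`, while the canonical URL itself is served as-is. A redirect is generally considered the stronger signal, since users and crawlers both end up on the destination, whereas a canonical tag leaves the duplicate page reachable.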

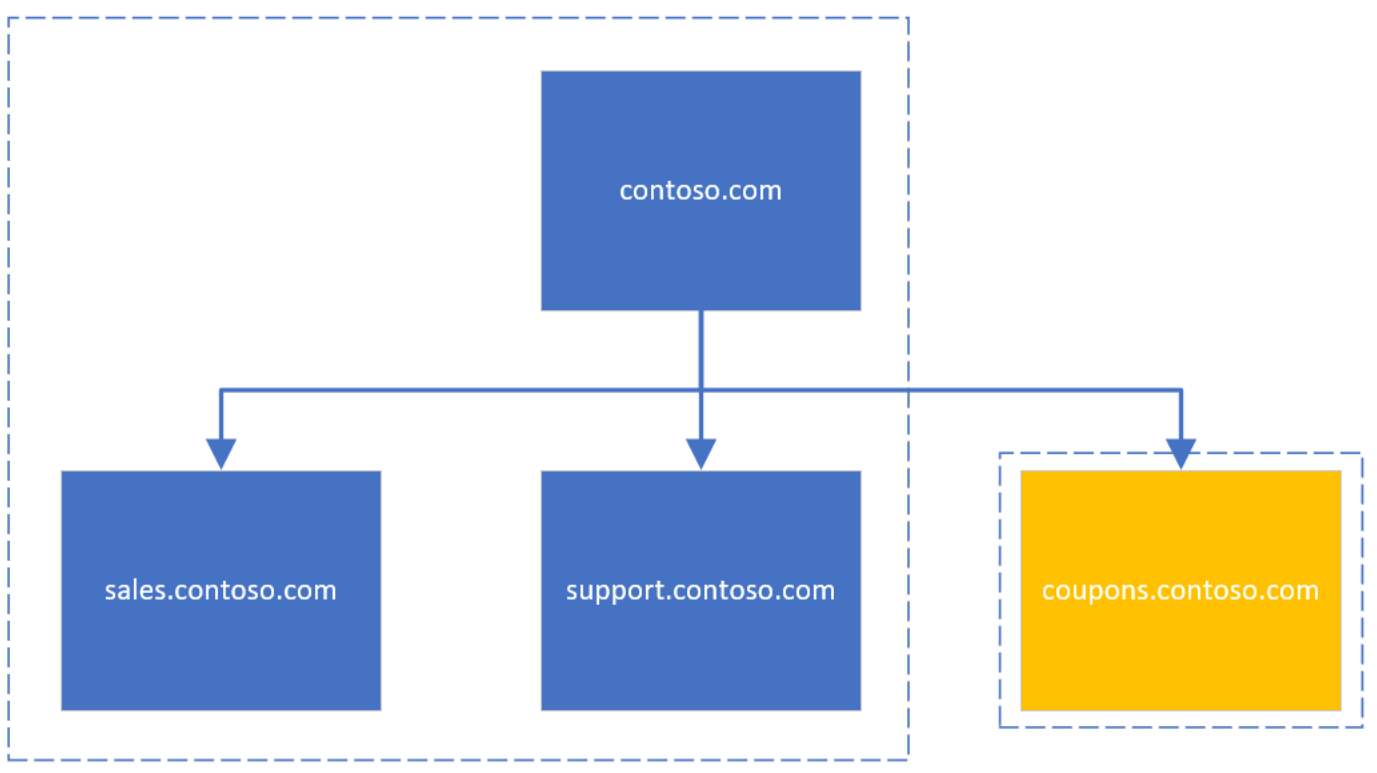

Subdomain or subfolder leasing

Over the past few months, we heard concerns from the SEO community around the growing practice of hosting third-party content or letting a third party operate a designated subdomain or subfolder, generally in exchange for compensation. This practice, which some people call “subdomain (or subfolder) leasing”, tends to blur website boundaries. Most of the domain is a single website except for a single subdomain or subfolder, which is a separate website operated by a third party.

In most cases that we reviewed, the subdomain had very little visibility for direct navigation from the main website. Concretely, there were very few links from the main domain to the subdomain and these links were generally tucked all the way at the bottom of the main domain pages or in other obscure places. Therefore, the intent was clearly to benefit from site-level signals, even though the content on the subdomain had very little to do with the content on the rest of the domain.

Fig. 5 – The domain is mostly a single website, with the exception of one subdomain.

Some people in the SEO community argue that it’s fair game for a website to monetize its reputation by letting a third party buy and operate from a subdomain. However, in this case the practice amounts to buying ranking signals, which is not much different from buying links.

Therefore, we decided to consider “subdomain leasing” a violation of our “inorganic site structure” policy when it is clearly used to bring a completely unrelated third-party service into the website boundary, for the sole purpose of leaking site-level signals to that service. In most cases, the penalties issued for that violation would apply only to the leased subdomain, not the root domain.

Your responsibility as domain owner

This article is also an opportunity to remind domain owners that they are ultimately responsible for the content hosted under their domain, regardless of the website boundaries that we identify. This is particularly true when subdomains or subfolders are operated by different entities.

While clear website boundaries will prevent negative signals due to a single bad actor from leaking to other content hosted under the same domain, the overall domain reputation will be affected if a disproportionate number of websites end up in violation of our webmaster guidelines. Taking an extreme case, if you offer free hosting on your subdomains and 95% of your subdomains are flagged as spam, we will expand penalties to the entire domain, even if the root website itself is not spam.

Another unfortunate case is hacked sites. Once a website is compromised, it is typical for hackers to create subfolders or subdirectories containing spam content, sometimes unbeknownst to the legitimate owner. When we detect this case, we generally penalize the entire website until it is clean.

Learning from you

If you believe you have been unfairly penalized, you can contact Bing Webmaster Support and file a reconsideration request. Please document the situation as thoroughly and transparently as possible, listing all the domains involved. However, we cannot guarantee that we will lift the penalty.

Your feedback is valuable to us! Clarifying our existing duplicate content policy and our stance on subdomain leasing were two requests we heard from the SEO community, and we hope this article addressed both. As we are in the middle of a major update of the Bing Webmaster Guidelines, please feel free to reach out to us and share feedback on Twitter or Facebook.

Thank you,

Frederic Dubut and the Bing Webmaster Tools Team
