.NET Core 3.0 Preview 8 is now available and it includes a bunch of new updates to ASP.NET Core and Blazor.

Here’s the list of what’s new in this preview:

- Project template updates
  - Cleaned up top-level templates in Visual Studio
  - Angular template updated to Angular 8
  - Blazor templates renamed and simplified
  - Razor Class Library template replaces the Blazor Class Library template
- Case-sensitive component binding
- Improved reconnection logic for Blazor Server apps
- NavLink component updated to handle additional attributes
- Culture aware data binding
- Automatic generation of backing fields for `@ref`
- Razor Pages support for `@attribute`
- New networking primitives for non-HTTP Servers
- Unix domain socket support for the Kestrel Sockets transport
- gRPC support for CallCredentials
- ServiceReference tooling in Visual Studio
- Diagnostics improvements for gRPC

Please see the release notes for additional details and known issues.

Get started

To get started with ASP.NET Core in .NET Core 3.0 Preview 8, install the .NET Core 3.0 Preview 8 SDK.

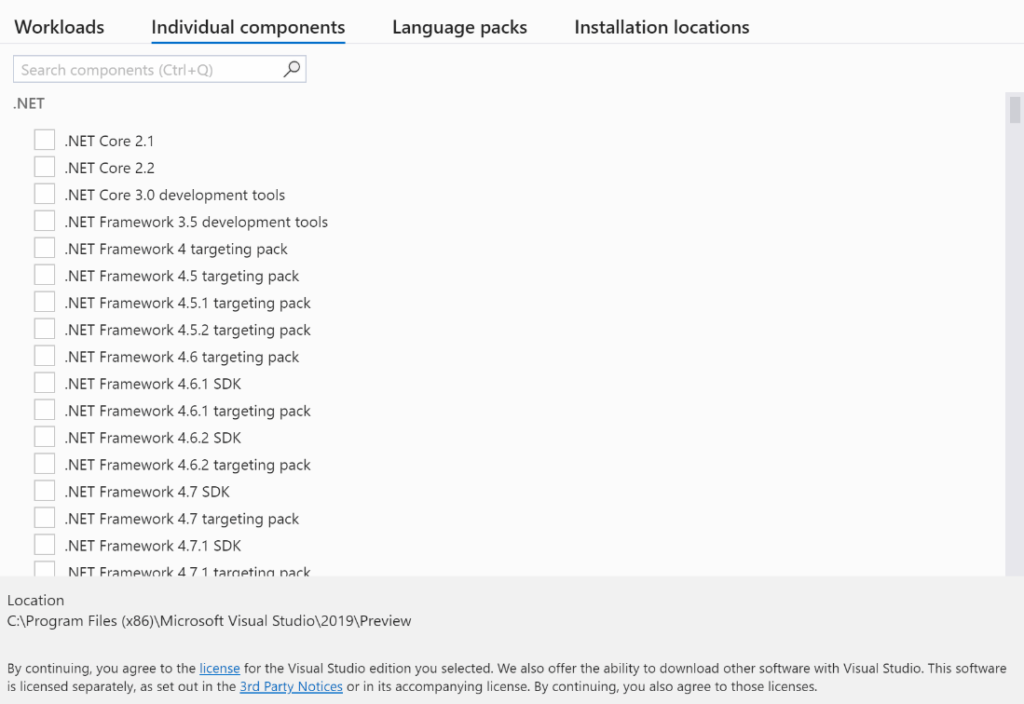

If you’re on Windows using Visual Studio, install the latest preview of Visual Studio 2019.

.NET Core 3.0 Preview 8 requires Visual Studio 2019 16.3 Preview 2 or later.

To install the latest Blazor WebAssembly template, also run the following command:

```
dotnet new -i Microsoft.AspNetCore.Blazor.Templates::3.0.0-preview8.19405.7
```

Upgrade an existing project

To upgrade an existing ASP.NET Core app to .NET Core 3.0 Preview 8, follow the migration steps in the ASP.NET Core docs.

Please also see the full list of breaking changes in ASP.NET Core 3.0.

To upgrade an existing ASP.NET Core 3.0 Preview 7 project to Preview 8:

- Update `Microsoft.AspNetCore.*` package references to `3.0.0-preview8.19405.7`.
- In Razor components, rename `OnInit` to `OnInitialized` and `OnInitAsync` to `OnInitializedAsync`.
- In Blazor apps, update Razor component parameters to be public, as non-public component parameters now result in an error.
- In Blazor WebAssembly apps that make use of the `HttpClient` JSON helpers, add a package reference to `Microsoft.AspNetCore.Blazor.HttpClient`.
- On Blazor form components, remove use of the `Id` and `Class` parameters and instead use the HTML `id` and `class` attributes.
- Rename `ElementRef` to `ElementReference`.
- Remove backing field declarations when using `@ref`, or specify the `@ref:suppressField` parameter to suppress automatic backing field generation.
- Update calls to `ComponentBase.Invoke` to call `ComponentBase.InvokeAsync`.
- Update uses of `ParameterCollection` to use `ParameterView`.
- Update uses of `IComponent.Configure` to use `IComponent.Attach`.
- Remove use of the `Microsoft.AspNetCore.Components.Layouts` namespace.

You should hopefully now be all set to use .NET Core 3.0 Preview 8.

Project template updates

Cleaned up top-level templates in Visual Studio

Top-level ASP.NET Core project templates in the “Create a new project” dialog in Visual Studio no longer appear duplicated in the “Create a new ASP.NET Core web application” dialog. The following ASP.NET Core templates now only appear in the “Create a new project” dialog:

- Razor Class Library

- Blazor App

- Worker Service

- gRPC Service

Angular template updated to Angular 8

The Angular template for ASP.NET Core 3.0 has now been updated to use Angular 8.

Blazor templates renamed and simplified

We’ve updated the Blazor templates to use a consistent naming style and to reduce the number of templates:

- The “Blazor (server-side)” template is now called “Blazor Server App”. Use `blazorserver` to create a Blazor Server app from the command line.
- The “Blazor” template is now called “Blazor WebAssembly App”. Use `blazorwasm` to create a Blazor WebAssembly app from the command line.
- To create an ASP.NET Core hosted Blazor WebAssembly app, select the “ASP.NET Core hosted” option in Visual Studio, or pass the `--hosted` option on the command line.

![Create a new Blazor app]()

```
dotnet new blazorwasm --hosted
```

Razor Class Library template replaces the Blazor Class Library template

The Razor Class Library template is now set up for Razor component development by default, and the Blazor Class Library template has been removed. New Razor Class Library projects target .NET Standard so they can be used from both Blazor Server and Blazor WebAssembly apps. To create a new Razor Class Library project that targets .NET Core and supports Pages and Views instead, select the “Support pages and views” option in Visual Studio, or pass the `--support-pages-and-views` option on the command line.

![Razor Class Library for Pages and Views]()

```
dotnet new razorclasslib --support-pages-and-views
```

Case-sensitive component binding

Components in .razor files are now case-sensitive. This enables some useful new scenarios and improves diagnostics from the Razor compiler.

For example, the Counter has a button for incrementing the count that is styled as a primary button. What if we wanted a Button component that is styled as a primary button by default? Creating a component named Button in previous Blazor releases was problematic because it clashed with the button HTML element, but now that component matching is case-sensitive we can create our Button component and use it in Counter without issue.

Button.razor

```razor
<button class="btn btn-primary" @attributes="AdditionalAttributes" @onclick="OnClick">@ChildContent</button>

@code {
    [Parameter]
    public EventCallback<UIMouseEventArgs> OnClick { get; set; }

    [Parameter]
    public RenderFragment ChildContent { get; set; }

    [Parameter(CaptureUnmatchedValues = true)]
    public IDictionary<string, object> AdditionalAttributes { get; set; }
}
```

Counter.razor

```razor
@page "/counter"

<h1>Counter</h1>

<p>Current count: @currentCount</p>

<Button OnClick="IncrementCount">Click me</Button>

@code {
    int currentCount = 0;

    void IncrementCount()
    {
        currentCount++;
    }
}
```

Notice that the Button component is Pascal-cased, which is the typical style for .NET types. If we instead try to name our component `button`, we get a warning that components cannot start with a lowercase letter due to the potential conflicts with HTML elements.

![Lowercase component warning]()

We can move the Button component into a Razor Class Library so that it can be reused in other projects. We can then reference the Razor Class Library from our web app. The Button component will now have the default namespace of the Razor Class Library. The Razor compiler will resolve components based on the in-scope namespaces. If we try to use our Button component without adding a `@using` directive for the requisite namespace, we now get a useful error message at build time.

![Unrecognized component error]()

NavLink component updated to handle additional attributes

The built-in NavLink component now supports passing through additional attributes to the rendered anchor tag. Previously NavLink had specific support for the href and class attributes, but now you can specify any additional attribute you’d like. For example, you can specify the anchor target like this:

```razor
<NavLink href="my-page" target="_blank">My page</NavLink>
```

which would render:

```html
<a href="my-page" target="_blank" rel="noopener noreferrer">My page</a>
```

Improved reconnection logic for Blazor Server apps

Blazor Server apps require a live connection to the server in order to function. If the connection or the server-side state associated with it is lost, then the client will be unable to function. Blazor Server apps will attempt to reconnect to the server in the event of an intermittent connection loss, and this logic has been made more robust in this release. If the reconnection attempts fail before the network connection can be reestablished, then the user can still attempt to retry the connection manually by clicking the provided “Retry” button.

However, if the server-side state associated with the connection was also lost (e.g. the server was restarted), then clients will still be unable to connect. A common situation where this occurs is during development in Visual Studio. Visual Studio will watch the project for file changes and then rebuild and restart the app as changes occur. When this happens, the server-side state associated with any connected clients is lost, so any attempt to reconnect with that state will fail. The only option is to reload the app and establish a new connection.

New in this release, the app will now also suggest that the user reload the browser when the connection is lost and reconnection fails.

![Reload prompt]()

Culture aware data binding

Data-binding support (@bind) for <input> elements is now culture-aware. Data bound values will be formatted for display and parsed using the current culture as specified by the System.Globalization.CultureInfo.CurrentCulture property. This means that @bind will work correctly when the user’s desired culture has been set as the current culture, which is typically done using the ASP.NET Core localization middleware (see Localization).

You can also manually specify the culture to use for data binding using the new @bind:culture parameter, where the value of the parameter is a CultureInfo instance. For example, to bind using the invariant culture:

```razor
<input @bind="amount" @bind:culture="CultureInfo.InvariantCulture" />
```
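Why the culture matters can be seen outside Blazor with plain .NET formatting and parsing APIs. The sketch below is not Blazor's binding code: `CultureBindingSketch` and its methods are hypothetical names, and the comma-decimal culture is built by cloning the invariant culture (an assumption made so the example does not depend on the operating system's culture data).

```csharp
using System.Globalization;

// Hypothetical helper (not part of Blazor) showing the format/parse round-trip
// that culture-aware data binding performs for a numeric <input>.
public static class CultureBindingSketch
{
    // Builds a culture that uses ',' for decimals and '.' for grouping, as many
    // European locales do. Clone() returns a writable copy of the read-only
    // invariant culture, so no OS culture data is needed.
    public static CultureInfo CommaDecimalCulture()
    {
        var culture = (CultureInfo)CultureInfo.InvariantCulture.Clone();
        culture.NumberFormat.NumberDecimalSeparator = ",";
        culture.NumberFormat.NumberGroupSeparator = ".";
        return culture;
    }

    // Value -> text displayed in the <input>.
    public static string Format(decimal value, CultureInfo culture) =>
        value.ToString(culture);

    // Text typed by the user -> value; returns false if the text can't be parsed.
    public static bool TryParse(string text, CultureInfo culture, out decimal value) =>
        decimal.TryParse(text, NumberStyles.Number, culture, out value);
}
```

With the comma culture, `1234.5m` is displayed as `1234,5` and that text parses back to `1234.5`. Parsing the same text with `CultureInfo.InvariantCulture` does not give `1234.5`, which is the kind of mismatch that binding with the user's culture, or pinning one with `@bind:culture`, avoids.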

The `<input type="number" />` and `<input type="date" />` field types will by default use `CultureInfo.InvariantCulture` and the formatting rules appropriate for these field types in the browser. These field types cannot contain free-form text and have a look and feel that is controlled by the browser.

Other field types with specific formatting requirements include datetime-local, month, and week. These field types are not supported by Blazor at the time of writing because they are not supported by all major browsers.

Data binding now also includes support for binding to DateTime?, DateTimeOffset, and DateTimeOffset?.

Automatic generation of backing fields for @ref

The Razor compiler will now automatically generate a backing field for both element and component references when using @ref. You no longer need to define these fields manually:

```razor
<button @ref="myButton" @onclick="OnClicked">Click me</button>

<Counter @ref="myCounter" IncrementAmount="10" />

@code {
    void OnClicked() => Console.WriteLine($"I have a {myButton} and myCounter.IncrementAmount={myCounter.IncrementAmount}");
}
```

In some cases you may still want to manually create the backing field. For example, declaring the backing field manually is required when referencing generic components. To suppress backing field generation specify the @ref:suppressField parameter.

Razor Pages support for @attribute

Razor Pages now support the new `@attribute` directive for adding attributes to the generated page class.

For example, you can now specify that a page requires authorization like this:

```razor
@page
@attribute [Microsoft.AspNetCore.Authorization.Authorize]

<h1>Authorized users only!</h1>

<p>Hello @User.Identity.Name. You are authorized!</p>
```

New networking primitives for non-HTTP Servers

As part of the effort to decouple the components of Kestrel, we are introducing new networking primitives allowing you to add support for non-HTTP protocols.

You can bind to an endpoint (`System.Net.EndPoint`) by calling `BindAsync` on an `IConnectionListenerFactory`. This returns an `IConnectionListener`, which can be used to accept new connections. Calling `AcceptAsync` returns a `ConnectionContext` with details on the connection. A `ConnectionContext` is similar to `HttpContext`, except that it represents a connection instead of an HTTP request and response.

The example below shows a simple TCP echo server hosted in a `BackgroundService` and built using these new primitives.

```csharp
using System;
using System.Net;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Connections;
using Microsoft.Extensions.Hosting;
using Microsoft.Extensions.Logging;

public class TcpEchoServer : BackgroundService
{
    private readonly ILogger<TcpEchoServer> _logger;
    private readonly IConnectionListenerFactory _factory;
    private IConnectionListener _listener;

    public TcpEchoServer(ILogger<TcpEchoServer> logger, IConnectionListenerFactory factory)
    {
        _logger = logger;
        _factory = factory;
    }

    protected override async Task ExecuteAsync(CancellationToken stoppingToken)
    {
        _listener = await _factory.BindAsync(new IPEndPoint(IPAddress.Loopback, 6000), stoppingToken);

        while (true)
        {
            var connection = await _listener.AcceptAsync(stoppingToken);

            // AcceptAsync will return null upon disposing the listener
            if (connection == null)
            {
                break;
            }

            // In an actual server, ensure all accepted connections are disposed prior to completing
            _ = Echo(connection, stoppingToken);
        }
    }

    public override async Task StopAsync(CancellationToken cancellationToken)
    {
        // Stop accepting connections (the listener is null if binding never completed)
        if (_listener != null)
        {
            await _listener.DisposeAsync();
        }

        // Let BackgroundService cancel stoppingToken and wait for ExecuteAsync to finish
        await base.StopAsync(cancellationToken);
    }

    private async Task Echo(ConnectionContext connection, CancellationToken stoppingToken)
    {
        try
        {
            var input = connection.Transport.Input;
            var output = connection.Transport.Output;

            // Copy everything the client sends straight back to it
            await input.CopyToAsync(output, stoppingToken);
        }
        catch (OperationCanceledException)
        {
            _logger.LogInformation("Connection {ConnectionId} cancelled due to server shutdown", connection.ConnectionId);
        }
        catch (Exception e)
        {
            _logger.LogError(e, "Connection {ConnectionId} threw an exception", connection.ConnectionId);
        }
        finally
        {
            await connection.DisposeAsync();
            _logger.LogInformation("Connection {ConnectionId} disconnected", connection.ConnectionId);
        }
    }
}
```
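Any TCP client can be used to try the echo server out. The following is a minimal sketch using `System.Net.Sockets.TcpClient`; `EchoClient` and `SendAsync` are hypothetical names, and it assumes the server is reachable at the address the sample binds (127.0.0.1, port 6000).

```csharp
using System.Net.Sockets;
using System.Text;
using System.Threading.Tasks;

// Hypothetical test client for an echo server such as TcpEchoServer.
public static class EchoClient
{
    // Sends the message as UTF-8 and waits until the same number of bytes comes back.
    public static async Task<string> SendAsync(string host, int port, string message)
    {
        using var client = new TcpClient();
        await client.ConnectAsync(host, port);
        using var stream = client.GetStream();

        var request = Encoding.UTF8.GetBytes(message);
        await stream.WriteAsync(request, 0, request.Length);

        // TCP may split the reply across several reads, so keep reading until
        // the whole echo has arrived or the server closes the connection.
        var response = new byte[request.Length];
        var received = 0;
        while (received < response.Length)
        {
            var read = await stream.ReadAsync(response, received, response.Length - received);
            if (read == 0)
            {
                break; // connection closed before the full echo arrived
            }
            received += read;
        }

        return Encoding.UTF8.GetString(response, 0, received);
    }
}
```

With the echo server running, `await EchoClient.SendAsync("127.0.0.1", 6000, "hello")` should return `"hello"`.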

Unix domain socket support for the Kestrel Sockets transport

We’ve updated the default sockets transport in Kestrel to add support for Unix domain sockets (on Linux, macOS, and Windows 10, version 1803 and newer). To bind to a Unix socket, you can call the `ListenUnixSocket()` method on `KestrelServerOptions`.

```csharp
public static IHostBuilder CreateHostBuilder(string[] args) =>
    Host.CreateDefaultBuilder(args)
        .ConfigureWebHostDefaults(webBuilder =>
        {
            webBuilder
                .ConfigureKestrel(o =>
                {
                    o.ListenUnixSocket("/var/listen.sock");
                })
                .UseStartup<Startup>();
        });
```
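One way to check that Kestrel is answering on the socket is to send it a raw HTTP request. The sketch below assumes the socket path used above (`/var/listen.sock`) and uses `UnixDomainSocketEndPoint` from `System.Net.Sockets`; `UnixSocketHttpProbe` is a hypothetical helper, and the request is a bare HTTP/1.1 GET written by hand rather than sent through `HttpClient`.

```csharp
using System.Net.Sockets;
using System.Text;

// Hypothetical probe: sends a minimal HTTP/1.1 GET over a Unix domain socket
// and returns the raw response text (status line, headers, and body).
public static class UnixSocketHttpProbe
{
    public static string Get(string socketPath, string requestPath = "/")
    {
        using var socket = new Socket(AddressFamily.Unix, SocketType.Stream, ProtocolType.Unspecified);
        socket.Connect(new UnixDomainSocketEndPoint(socketPath));

        // "Connection: close" asks the server to close the connection after
        // responding, so reading until end-of-stream yields the whole response.
        var request = $"GET {requestPath} HTTP/1.1\r\nHost: localhost\r\nConnection: close\r\n\r\n";
        socket.Send(Encoding.ASCII.GetBytes(request));

        var response = new StringBuilder();
        var buffer = new byte[4096];
        int read;
        while ((read = socket.Receive(buffer)) > 0)
        {
            response.Append(Encoding.ASCII.GetString(buffer, 0, read));
        }
        return response.ToString();
    }
}
```

If the app is listening, `UnixSocketHttpProbe.Get("/var/listen.sock")` should return an HTTP response; the exact status depends on what the app serves at `/`.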

gRPC support for CallCredentials

In Preview 8, we’ve added support for `CallCredentials`, allowing interoperability with existing libraries like Grpc.Auth that rely on `CallCredentials`.

Diagnostics improvements for gRPC

Support for Activity

The gRPC client and server use Activities to annotate inbound/outbound requests with baggage containing information about the current RPC operation. This information can be accessed by telemetry frameworks for distributed tracing and by logging frameworks.

EventCounters

The newly introduced Grpc.AspNetCore.Server and Grpc.Net.Client providers now emit the following event counters:

- `total-calls`
- `current-calls`
- `calls-failed`
- `calls-deadline-exceeded`
- `messages-sent`
- `messages-received`
- `calls-unimplemented`

You can use the `dotnet-counters` global tool to view the emitted metrics:

```
dotnet counters monitor -p <PID> Grpc.AspNetCore.Server
```
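The same counters can also be read from inside a process with an `EventListener`. This is a sketch, not part of gRPC: `CounterSnapshotListener` is a hypothetical name, and it assumes the providers publish through the standard EventCounters mechanism, where each value arrives as an `EventCounters` event whose payload is a dictionary containing `Name` and either `Mean` or `Increment`.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Diagnostics.Tracing;

// Hypothetical in-process counter reader: subscribes to one EventSource, such as
// "Grpc.AspNetCore.Server", and keeps the latest value reported for each counter.
public sealed class CounterSnapshotListener : EventListener
{
    // Field initializers run before the base EventListener constructor, which calls
    // OnEventSourceCreated for sources that already exist. Those sources are parked
    // in _pending until _sourceName has been assigned.
    private readonly object _gate = new object();
    private readonly List<EventSource> _pending = new List<EventSource>();
    private string _sourceName;
    private int _intervalSeconds;

    public ConcurrentDictionary<string, double> Latest { get; } =
        new ConcurrentDictionary<string, double>();

    public CounterSnapshotListener(string sourceName, int intervalSeconds = 1)
    {
        EventSource[] existing;
        lock (_gate)
        {
            _intervalSeconds = intervalSeconds;
            _sourceName = sourceName;
            existing = _pending.ToArray();
            _pending.Clear();
        }

        foreach (var source in existing)
        {
            EnableIfMatch(source);
        }
    }

    protected override void OnEventSourceCreated(EventSource eventSource)
    {
        lock (_gate)
        {
            if (_sourceName == null)
            {
                _pending.Add(eventSource);
                return;
            }
        }

        EnableIfMatch(eventSource);
    }

    private void EnableIfMatch(EventSource source)
    {
        if (source.Name != _sourceName)
        {
            return;
        }

        // EventCounterIntervalSec asks the source to publish counter values periodically.
        EnableEvents(source, EventLevel.Verbose, EventKeywords.All, new Dictionary<string, string>
        {
            ["EventCounterIntervalSec"] = _intervalSeconds.ToString()
        });
    }

    protected override void OnEventWritten(EventWrittenEventArgs eventData)
    {
        if (eventData.EventName != "EventCounters" || eventData.Payload == null || eventData.Payload.Count == 0)
        {
            return;
        }

        // The first payload item is a dictionary describing one counter.
        if (!(eventData.Payload[0] is IDictionary<string, object> fields) ||
            !fields.TryGetValue("Name", out var name) || name == null)
        {
            return;
        }

        // Mean-style counters report "Mean"; incrementing counters report "Increment".
        if (fields.TryGetValue("Mean", out var mean))
        {
            Latest[name.ToString()] = Convert.ToDouble(mean);
        }
        else if (fields.TryGetValue("Increment", out var increment))
        {
            Latest[name.ToString()] = Convert.ToDouble(increment);
        }
    }
}
```

For example, a server could create `new CounterSnapshotListener("Grpc.AspNetCore.Server")` at startup and inspect `Latest` after some calls have been made; the keys are whatever counter names the provider publishes.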

ServiceReference tooling in Visual Studio

We’ve added support in Visual Studio that makes it easier to manage references to other Protocol Buffers documents and OpenAPI documents.

![ServiceReference]()

When pointed at OpenAPI documents, the ServiceReference experience in Visual Studio can generate typed C#/TypeScript clients using NSwag.

When pointed at Protocol Buffer (.proto) files, the ServiceReference experience in Visual Studio can generate gRPC service stubs, gRPC clients, or message types using the Grpc.Tools package.

SignalR User Survey

We’re interested in how you use SignalR and the Azure SignalR Service, and your opinions on SignalR features. To that end, we’ve created a survey we’d like to invite any SignalR customer to complete. If you’re interested in talking to one of the engineers from the SignalR team about your ideas or feedback, we’ve provided an opportunity to enter your contact information in the survey, but that information is not required. Help us plan the next wave of SignalR features by providing your feedback in the survey.

Give feedback

We hope you enjoy the new features in this preview release of ASP.NET Core and Blazor! Please let us know what you think by filing issues on GitHub.

Thanks for trying out ASP.NET Core and Blazor!

The post ASP.NET Core and Blazor updates in .NET Core 3.0 Preview 8 appeared first on ASP.NET Blog.