Animated character recognition, multilingual speech transcription and more now available

At Microsoft, our mission is to empower every person and organization on the planet to achieve more. The media industry exemplifies this mission. We live in an age where more content is being created and consumed in more ways and on more devices than ever. At IBC 2019, we’re delighted to share the latest innovations we’ve been working on and how they can help transform your media workflows. Read on to learn more, or join our product teams and partners at Hall 1 Booth C27 at the RAI in Amsterdam from September 13th to 17th.

Video Indexer adds support for animation and multilingual content

We made our award-winning Azure Media Services Video Indexer generally available at IBC last year, and this year it’s getting even better. Video Indexer automatically extracts insights and metadata such as spoken words, faces, emotions, topics and brands from media files, without you needing to be a machine learning expert. Our latest announcements include previews of two highly requested and differentiated capabilities, animated character recognition and multilingual speech transcription, as well as several additions to existing models available today in Video Indexer.

Animated character recognition

Animated content or cartoons are one of the most popular content types, but standard AI vision models built for human faces do not work well with them, especially if the content has characters without human features. In this new preview solution, Video Indexer joins forces with Microsoft’s Azure Custom Vision service to provide a new set of models that automatically detect and group animated characters and allow customers to then tag and recognize them easily via integrated custom vision models. These models are integrated into a single pipeline, which allows anyone to use the service without any previous machine learning skills. The results are available through the no-code Video Indexer portal or the REST API for easy integration into your own applications.

![Spongebob Image of the AMS Video Indexer recognizing animated characters.]()

We built these animated character models in collaboration with select customers who contributed real animated content for training and testing. The value of the new functionality is well articulated by Andy Gutteridge, Senior Director, Studio & Post-Production Technology at Viacom International Media Networks, which was one of the data contributors: “The addition of reliable AI-based animated detection will enable us to discover and catalogue character metadata from our content library quickly and efficiently. Most importantly, it will give our creative teams the power to find the content they want instantly, minimize time spent on media management and allow them to focus on the creative.”

To get started with animated character recognition, please visit our documentation page.

Multilingual identification and transcription

Some media assets, like news, current affairs, and interviews, contain audio with speakers using different languages. Most existing speech-to-text capabilities require the audio recognition language to be specified in advance, which is an obstacle to transcribing multilingual videos. Our new automatic spoken language identification feature for multilingual content uses machine learning to identify the different languages used in a media asset. Once detected, each language segment is automatically transcribed in the identified language, and all segments are then combined into one transcription file containing multiple languages.

![An image of the Video Indexer screen, showing multilingual transcription.]()

The resulting transcription is available both as part of Video Indexer JSON output and as closed-caption files. The output transcript is also integrated with Azure Search, allowing you to immediately search across videos for the different language segments. Furthermore, the multi-language transcription is available as part of the Video Indexer portal experience so you can view the transcript and identified language by time, or jump to the specific places in the video for each language and see the multi-language transcription as captions as a video is played. You can also translate the output back-and-forth into 54 different languages via the portal and API.
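To make the “one transcript, many languages” idea concrete, here is a minimal sketch of how per-language segments could be merged into a single time-ordered transcript and summarized as a language-by-time view. The `Segment` structure and the helper names are hypothetical illustrations; they are not the Video Indexer JSON schema or API.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One transcribed span of audio (hypothetical structure, not the Video Indexer schema)."""
    start: float   # seconds from the start of the media
    end: float     # seconds from the start of the media
    language: str  # detected language code, e.g. "en-US"
    text: str


def merge_segments(segments: list[Segment]) -> list[Segment]:
    """Combine segments from every language pass into one time-ordered transcript."""
    return sorted(segments, key=lambda s: (s.start, s.end))


def language_timeline(transcript: list[Segment]) -> list[tuple[str, float, float]]:
    """Collapse consecutive segments in the same language into (language, start, end) runs."""
    timeline: list[tuple[str, float, float]] = []
    for seg in transcript:
        if timeline and timeline[-1][0] == seg.language:
            lang, start, _ = timeline[-1]
            timeline[-1] = (lang, start, seg.end)  # extend the current run
        else:
            timeline.append((seg.language, seg.start, seg.end))
    return timeline


def to_lines(transcript: list[Segment]) -> list[str]:
    """Render each segment as '[start-end] (language) text', e.g. for a simple caption preview."""
    return [f"[{s.start:.1f}-{s.end:.1f}] ({s.language}) {s.text}" for s in transcript]
```

For example, merging an English pass and a French pass of the same interview yields one transcript whose timeline alternates between `en-US` and `fr-FR` runs, which is the kind of view the portal offers when you browse identified languages by time.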

Read more about the new multilingual option and how to use it in Video Indexer in our documentation.

Additional new and improved models

We are also adding new and improving existing models within Video Indexer, including:

Extraction of people and location entities

We’ve extended our current brand detection capabilities to also incorporate well-known names and locations, such as the Eiffel Tower in Paris or Big Ben in London. When these appear in the generated transcript or on-screen via optical character recognition (OCR), a specific insight is created. With this new capability, you can review and search by all people, locations and brands that appeared in the video, along with their timeframes, description, and a link to our Bing search engine for more information.

![Azure Video Indexer entity extraction in the insight pane.]()

Editorial shot detection model

This new feature adds a set of “tags” to the metadata attached to each shot in the insights JSON to represent its editorial type, such as wide shot, medium shot, close up, extreme close up, two shot, multiple people, outdoor, and indoor. These shot-type characteristics come in handy when editing videos into clips and trailers, as well as when searching for a specific style of shot for artistic purposes.
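As a rough illustration of searching by shot style, the sketch below scans an insights JSON document for shots carrying a given editorial tag. The layout it assumes (`videos[].insights.shots[]`, each shot with `id`, `tags`, and `instances` holding `start`/`end` strings) and the tag spellings in the usage example are assumptions for illustration; check the Video Indexer documentation for the actual output schema.

```python
import json


def shots_with_tag(insights_json: str, tag: str) -> list[tuple[int, str, str]]:
    """Return (shot id, start, end) for every shot instance whose tags include `tag`.

    Assumes a hypothetical layout: {"videos": [{"insights": {"shots": [...]}}]}.
    Tag matching is case-insensitive.
    """
    doc = json.loads(insights_json)
    wanted = tag.lower()
    matches: list[tuple[int, str, str]] = []
    for video in doc.get("videos", []):
        for shot in video.get("insights", {}).get("shots", []):
            shot_tags = {t.lower() for t in shot.get("tags", [])}
            if wanted in shot_tags:
                for inst in shot.get("instances", []):
                    matches.append((shot["id"], inst["start"], inst["end"]))
    return matches
```

An editor looking for establishing shots could, for instance, call `shots_with_tag(doc, "Wide")` and use the returned time ranges as candidate cut points for a trailer.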

![Azure Video Indexer editorial shot type example.]()

Explore and read more about editorial shot type detection in Video Indexer.

Expanded granularity of IPTC mapping

Our topic inferencing model determines the topic of videos based on transcription, optical character recognition (OCR), and detected celebrities even if the topic is not explicitly stated. We map these inferred topics to four different taxonomies: Wikipedia, Bing, IPTC, and IAB. With this enhancement, we now include level-2 IPTC taxonomy.

Taking advantage of these enhancements is as easy as re-indexing your current Video Indexer library.

New live streaming functionality

We are also introducing two new live-streaming capabilities in preview to Azure Media Services.

Live transcription supercharges your live events with AI

Using Azure Media Services to stream a live event, you can now get an output stream that includes an automatically generated text track in addition to the video and audio content. This text track is created using AI-based live transcription of the audio of the contribution feed. Custom methods are applied before and after speech-to-text conversion in order to improve the end-user experience. The text track is packaged into IMSC1, TTML, or WebVTT, depending on whether you are delivering in DASH, HLS CMAF, or HLS TS.

Live linear encoding for 24/7 over-the-top (OTT) channels

Using our v3 APIs, you can create, manage, and stream live channels for OTT services and take advantage of all the other features of Azure Media Services like live to video on demand (VOD), packaging, and digital rights management (DRM).

To try these preview features, please visit the Azure Media Services Community page.

![An image showing live transcription signal flow.]()

New packaging features

Support for audio description tracks

Broadcast content frequently has an audio track that contains verbal explanations of on-screen action in addition to the normal program audio. This makes programming more accessible for vision-impaired viewers, especially if the content is highly visual. The new audio description feature enables a customer to annotate one of the audio tracks to be the audio description (AD) track, which in turn can be used by players to make the AD track discoverable by viewers.
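For context on how a player can discover such a track, HLS multivariant playlists conventionally mark an audio description rendition with `CHARACTERISTICS="public.accessibility.describes-video"` on its `EXT-X-MEDIA` tag. The sketch below builds such lines; it shows that general HLS convention only, not how Azure Media Services writes its manifests, and the group ID, names, and URIs in the tests are hypothetical.

```python
def ext_x_media_audio(group_id: str, name: str, language: str, uri: str,
                      audio_description: bool = False, default: bool = False) -> str:
    """Build an HLS EXT-X-MEDIA line for an audio rendition.

    When audio_description is True, the rendition is tagged with the
    public.accessibility.describes-video characteristic so players can offer it
    to viewers who want audio description.
    """
    attrs = [
        "TYPE=AUDIO",
        f'GROUP-ID="{group_id}"',
        f'NAME="{name}"',
        f'LANGUAGE="{language}"',
        f"DEFAULT={'YES' if default else 'NO'}",
        "AUTOSELECT=YES",  # must be YES whenever DEFAULT=YES
    ]
    if audio_description:
        attrs.append('CHARACTERISTICS="public.accessibility.describes-video"')
    attrs.append(f'URI="{uri}"')
    return "#EXT-X-MEDIA:" + ",".join(attrs)
```

A typical playlist would carry one main-program line with `default=True` and a second line for the AD track in the same group, so both appear as alternatives in the player’s audio menu.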

ID3 metadata insertion

In order to signal the insertion of advertisements or custom metadata events on a client player, broadcasters often make use of timed metadata embedded within the video. In addition to SCTE-35 signaling modes, we now also support ID3v2 or other custom schemas defined by an application developer for use by the client application.
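To show what an ID3 timed-metadata payload looks like at the byte level, here is a minimal sketch that builds an ID3v2.4 tag containing one user-defined text (`TXXX`) frame, following the ID3v2.4 layout (10-byte headers, 28-bit “syncsafe” sizes, UTF-8 text encoding byte `0x03`). The description/value pair in the tests is a hypothetical application-defined schema, and how the tag is carried in the stream or submitted to Azure Media Services is not shown here.

```python
def syncsafe(n: int) -> bytes:
    """Encode n as a 4-byte ID3v2.4 syncsafe integer (7 significant bits per byte)."""
    if not 0 <= n < 1 << 28:
        raise ValueError("value does not fit in a 28-bit syncsafe integer")
    return bytes([(n >> 21) & 0x7F, (n >> 14) & 0x7F, (n >> 7) & 0x7F, n & 0x7F])


def txxx_frame(description: str, value: str) -> bytes:
    """Build a TXXX (user-defined text) frame: encoding byte, description, NUL, value."""
    body = b"\x03" + description.encode("utf-8") + b"\x00" + value.encode("utf-8")
    # Frame header: 4-byte ID, syncsafe body size, 2 flag bytes (all clear).
    return b"TXXX" + syncsafe(len(body)) + b"\x00\x00" + body


def id3v24_tag(frames: list[bytes]) -> bytes:
    """Wrap frames in an ID3v2.4 tag header: 'ID3', version 4.0, no flags, syncsafe size."""
    payload = b"".join(frames)
    return b"ID3" + bytes([4, 0, 0]) + syncsafe(len(payload)) + payload
```

The syncsafe encoding keeps the high bit of every size byte clear, so the size field can never form a false MPEG sync pattern inside the stream.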

Microsoft Azure partners demonstrate end-to-end solutions

Bitmovin is debuting its Bitmovin Video Encoding and Bitmovin Video Player on Microsoft Azure. Customers can now use these encoding and player solutions on Azure and leverage advanced functionality such as 3-pass encoding, AV1/VVC codec support, multi-language closed captions, and pre-integrated video analytics for QoS, ad, and video tracking.

Evergent is showing its User Lifecycle Management Platform on Azure. As a leading provider of revenue and customer lifecycle management solutions, Evergent leverages Azure AI to enable premium entertainment service providers to improve customer acquisition and retention by generating targeted packages and offers at critical points in the customer lifecycle.

Haivision will showcase SRT Hub, its intelligent media routing cloud service, which helps customers transform end-to-end workflows, starting with ingest using Azure Data Box Edge and continuing with media workflow transformation using Hublets from Avid, Telestream, Wowza, Cinegy, and Make.tv.

SES has developed a suite of broadcast-grade media services on Azure for its satellite connectivity and managed media services customers. SES will show solutions for fully managed playout services, including master playout, localized playout and ad detection and replacement, and 24x7 high-quality multichannel live encoding on Azure.

SyncWords is making its caption automation technology and user-friendly cloud-based tools available on Azure. These offerings will make it easier for media organizations to add automated closed captioning and foreign language subtitling capabilities to their real-time and offline video processing workflows on Azure.

Global design and technology services company Tata Elxsi has integrated TEPlay, its OTT platform SaaS, with Azure Media Services to deliver OTT content from the cloud. Tata Elxsi has also brought FalconEye, its quality of experience (QoE) monitoring solution that focuses on actionable metrics and analytics, to Microsoft Azure.

Verizon Media is making its streaming platform available in beta on Azure. Verizon Media Platform is an enterprise-grade managed OTT solution including DRM, ad insertion, one-to-one personalized sessions, dynamic content replacement, and video delivery. The integration brings simplified workflows, global support and scale, and access to a range of unique capabilities available on Azure.

Many of our partners will also be presenting in the theater at our booth, so make sure you stop by to catch them!

Short distance, big impact

We are proud to support the 4K 4Charity Fun Run as a gold sponsor. This is a running and walking event held at various media industry events since 2014, and it raises awareness and financial support for non-profits focused on increased diversity and inclusion. Register and come join us on Saturday, September 14th, at 7:30am at the Amstelpark in Amsterdam.

Don’t miss out

There’s a lot more going on at the Microsoft booth this IBC. To learn more, read about how the community of our customers and partners are innovating on Azure in media and entertainment, or better yet come and join us in Hall 1 Booth C27. If you won’t be there, we’re sorry we’ll miss you, but you can try Video Indexer and Azure Media Services for yourself by following the links.

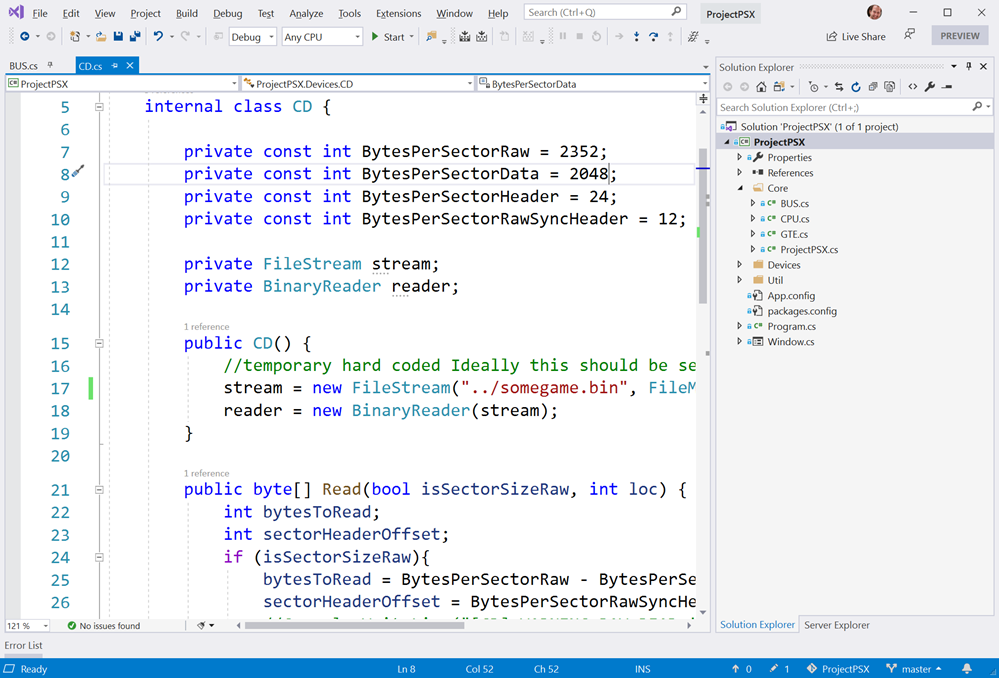

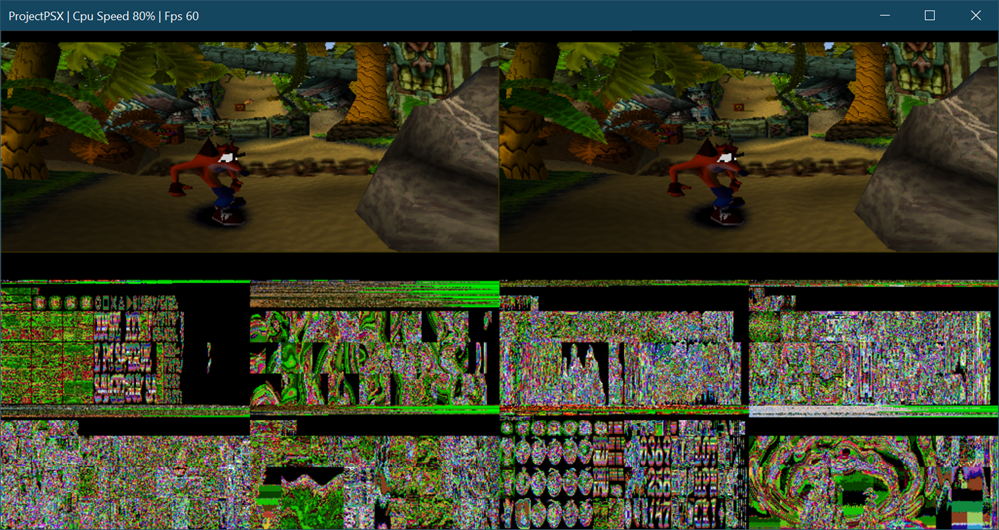
