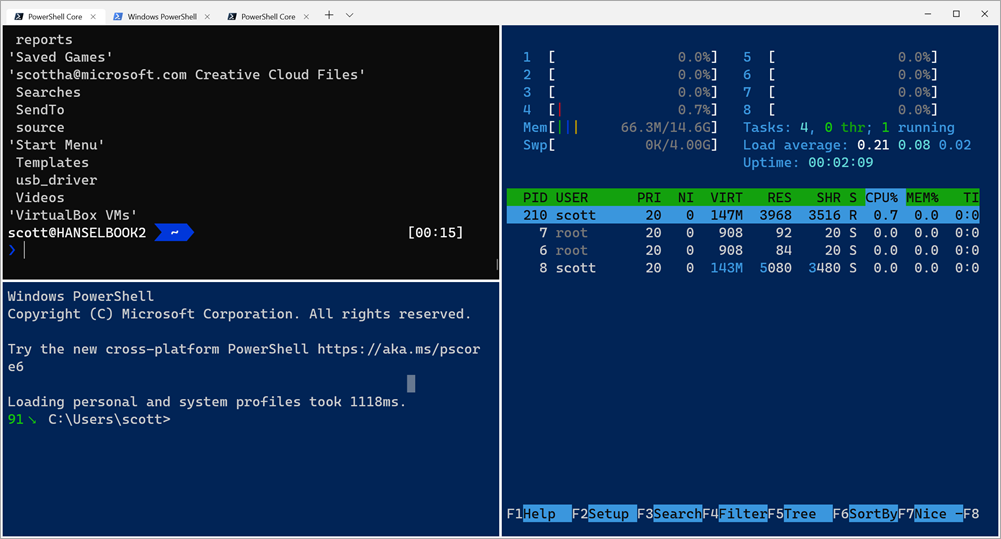

I posted recently about "What's the difference between a console, a terminal, and a shell?" The world of Windows is interesting - and a little weird and unfamiliar to non-Windows people. You might use Ubuntu or Mac and you've picked your shell like zsh or bash or pwsh, but then you come to Windows and we're hopping between shells (and now operating systems with WSL!) on a tab by tab basis.

If you're using a Windows shell like PowerShell because you like its .NET Core based engine and powerful scripting language, you might still miss common *nix shell commands like ls, grep, sed and more.

No matter what shell you're using in Windows (PowerShell, yori, cmd, whatever) you can always call into your default Ubuntu instance with "wsl command", so "wsl ls" or "wsl grep", but it'd be nice to have those integrated more naturally and comfortably.
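Here's a rough sketch of what that prefix style looks like from a PowerShell prompt. It assumes WSL is already installed with a default distro, and app.log is a made-up file name just for illustration:

```powershell
# Run a Linux command in the default WSL distro by prefixing it with "wsl".
wsl ls -la

# Pipe PowerShell output into a Linux command.
# app.log is a hypothetical file in the current directory.
Get-Content .\app.log | wsl grep -i error
```

It works, but you have to remember the prefix on every single command.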

Now there's a new series of "function wrappers" that make Linux commands available directly in PowerShell so you can easily transition between multiple environments.

This might seem weird but it allows us to create amazing piped commands that move in and out of Windows and Linux, PowerShell and bash. It's actually pretty amazing and very natural if you, like me, are non-denominational in your choice of operating system and preferred shell.
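As a small sketch of that kind of mixed pipeline, assuming the WslInterop module is installed and that grep and head have been imported as wrappers (the search term is just an example):

```powershell
# Assumes the WslInterop module is installed; import the wrappers we need.
Import-WslCommand "grep", "head"

# PowerShell cmdlet -> Linux grep -> Linux head -> PowerShell cmdlet.
# Get-ChildItem -Name emits plain file names, which grep filters in WSL,
# and the surviving lines flow back into PowerShell as strings.
Get-ChildItem -Name | grep -i log | head -n 5 | ForEach-Object { $_.ToUpper() }
```

Each stage just hands text to the next one, so it doesn't much matter which side of the Windows/Linux fence a given command lives on.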

These function wrappers are very neatly designed and even expose TAB completion across operating systems! That means I can type Linux commands in PowerShell and TAB completion comes along!

It's super easy to set up. From Mike Battista's GitHub:

- Install PowerShell Core

- Install the Windows Subsystem for Linux (WSL)

- Install the WslInterop module with Install-Module WslInterop

- Import commands with Import-WslCommand either from your profile for persistent access or on demand when you need a command (e.g. Import-WslCommand "awk", "emacs", "grep", "head", "less", "ls", "man", "sed", "seq", "ssh", "tail", "vim")

You'll do your Install-Module just once, and then run notepad $profile and add just that single Import-WslCommand line. Make sure you change it to expose the WSL/Linux commands that you want. Once you're done, you can just open PowerShell Core and mix and match your commands!
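Put together, a minimal profile might look something like this. The command list here is just my assumption of a reasonable starting set, so swap in whatever you actually use:

```powershell
# One-time setup, run once from a PowerShell Core prompt (not in the profile):
#   Install-Module WslInterop

# The single line to add to your profile (open it with: notepad $profile).
# Trim or extend this list to the Linux commands you want exposed.
Import-WslCommand "awk", "grep", "head", "less", "ls", "man", "sed", "tail"
```

Open a new PowerShell Core window afterwards so the profile gets loaded and the commands are available.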

From the blog, "With these function wrappers in place, we can now call our favorite Linux commands in a more natural way without having to prefix them with wsl or worry about how Windows paths are translated to WSL paths:"

- man bash

- less -i $profile.CurrentUserAllHosts

- ls -Al C:\Windows | less

- grep -Ein error *.log

- tail -f *.log

It's a really genius thing and kudos to Mike for sharing it with us! Go try it now. https://github.com/mikebattista/PowerShell-WSL-Interop

© 2019 Scott Hanselman. All rights reserved.
